Please help me (KL630)

I'm going to use the YOLOX model on the KL630.

The work so far is as follows.

I set up Ubuntu 20.04 in a virtual machine on my laptop, controlled the CatchCAM through PuTTY, and mounted a shared folder between the two so I could transfer files.

To add the YOLOX model, I exported the .pt file to ONNX with the opset changed from 18 to 11, optimized it with onnx2onnx.py, and completed the conversion to NEF using the NEF Generator.

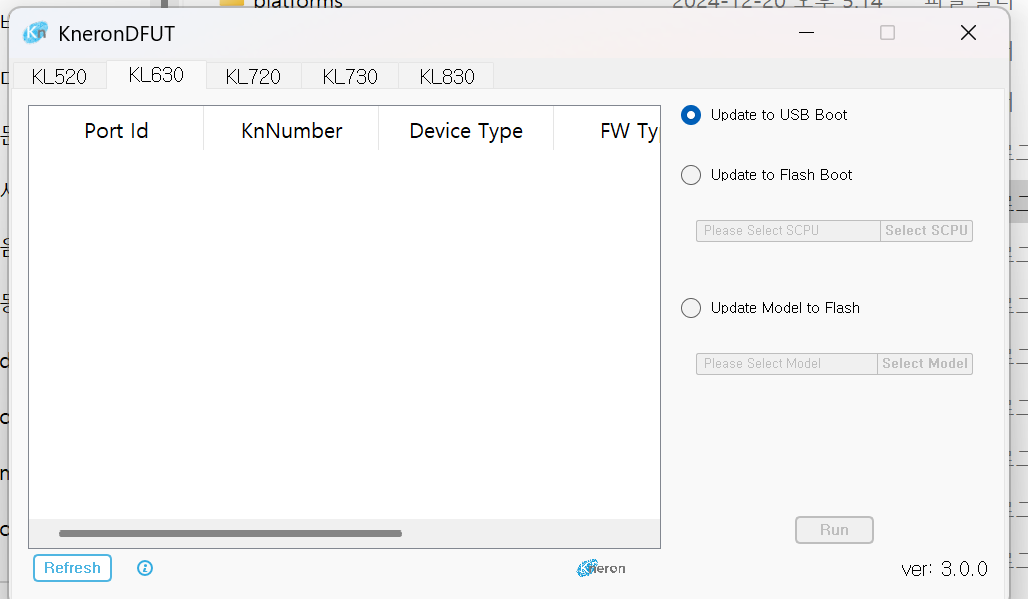

1. The next step is Kneron PLUS, and the document says the KL630 should use Kneron DFUT. However, nothing happens when I connect the KL630. What should I do in this case?

2. Only a few companies handle the KL630 in Korea, so I asked one of them to supply a KL630 set up in companion mode. However, I don't understand exactly what companion mode is for. Could you explain it?

3. Finally, for the YOLOX model I trained, I started the toolchain container with `docker run --rm -it -v /mnt/docker:/docker_mount kneron/toolchain:v0.22.0` and ran `workspace/libs/ONNX_Converter/optimizer_scripts/editor.py` to remove unnecessary nodes, but the model did not change no matter how many times I entered the commands from the document. How do I solve this?

Comments

Hi byeongju,

Below are the answers to your questions.

1. Please first confirm that the KL630 has been switched to device mode and execute the USB companion script. For details, please refer to the KL630_96board_User_Manual, Chapter 1.9 USB Device – PC (Kneron Plus).

Additionally, there are some installation dependencies on the Host side that require attention. Please refer to the link below.

2. Companion mode primarily allows the KL630 to connect to a Host via USB for model verification. For example, as shown in the diagram below, an image is sent from the Host to the KL630, with pre- and post-processing handled on the Host side, while the KL630 only provides the raw inference output. This makes it simpler to verify whether the model performs as expected on the KL630. Further details are available in the Kneron Document Center: https://doc.kneron.com/docs/#plus_c/getting_started/

3. Regarding the issue with editor.py not working, please upgrade the toolchain to version v0.27.0 and try again.
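To make answer 2 more concrete, here is a minimal Python sketch of the kind of post-processing the Host might run on the KL630's raw output in companion mode. It assumes the raw YOLOX output has already been decoded into pixel boxes `(x1, y1, x2, y2)` with one score each, and that the Host letterboxed the input image into the top-left corner of the model input, as the YOLOX reference demo does. The function names and the 0.3/0.45 thresholds are hypothetical; this is not the Kneron PLUS API, and the real output layout depends on how your NEF was built.

```python
"""Sketch of Host-side YOLOX post-processing in companion mode.

Assumptions (hypothetical, not Kneron PLUS code): raw output already decoded
into pixel boxes (x1, y1, x2, y2) plus one score per box, and the input image
was letterboxed into the top-left corner of the model input.
"""
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def letterbox_ratio(src_w: int, src_h: int, dst_w: int, dst_h: int) -> float:
    """Scale used to fit the original image into the model input
    while keeping its aspect ratio."""
    return min(dst_w / src_w, dst_h / src_h)


def _clip(v: float, hi: float) -> float:
    """Clamp v into [0, hi]."""
    return min(max(v, 0.0), hi)


def to_original_coords(box: Box, ratio: float, src_w: int, src_h: int) -> Box:
    """Map a box from model-input pixels back to the original image and clip it.
    Dividing by the ratio is enough because padding is assumed at right/bottom."""
    x1, y1, x2, y2 = (v / ratio for v in box)
    return (_clip(x1, float(src_w)), _clip(y1, float(src_h)),
            _clip(x2, float(src_w)), _clip(y2, float(src_h)))


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        score_thr: float = 0.3, iou_thr: float = 0.45) -> List[int]:
    """Class-agnostic greedy non-maximum suppression.
    Returns indices of kept boxes, highest score first."""
    # Drop low-confidence boxes, then visit the rest from best to worst score.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_thr for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Hypothetical decoded detections on a 640x640 model input,
    # for a 1280x720 source frame.
    boxes = [(100, 100, 200, 200), (105, 105, 205, 205), (300, 300, 400, 380)]
    scores = [0.9, 0.8, 0.7]
    ratio = letterbox_ratio(1280, 720, 640, 640)
    for i in nms(boxes, scores):  # keeps indices 0 and 2
        print(to_original_coords(boxes[i], ratio, 1280, 720), scores[i])
```

The sketch uses class-agnostic NMS for brevity; for a multi-class model you would typically run it separately for each class.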

Thanks!

Hello, thank you for your quick response.

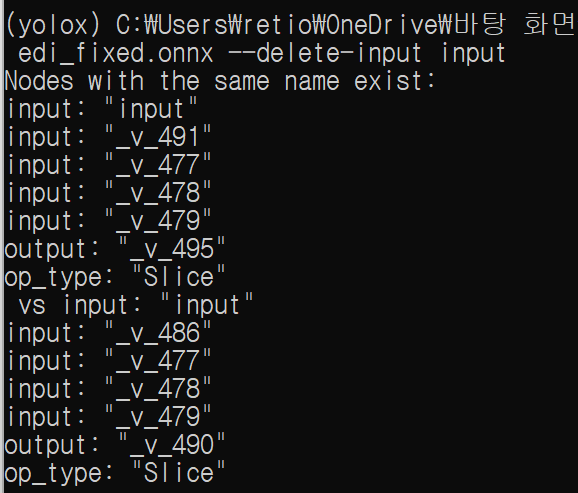

In your answer to question 3, you told me to upgrade the Kneron toolchain Docker image to v0.27.0, but the result is as shown in the picture.

I've also posted the command I entered and its output, just in case. Could you check what's wrong?

This is the output when I run editor.py in v0.27.0. I found on GitHub that changing the Python version should solve it, but I can't change the Python version inside the Docker container.

This picture shows editor.py running in v0.24.0, since it only runs on versions up to 0.24.0. The output is the same whether I pass only the input and output files or add the extra options. Am I using it incorrectly?

Hi byeongju,

Could you please provide the ONNX model you are using so I can verify it on my side?

Thank you for your answer.

I found the problem while testing in various ways, and I have now completed the conversion to NEF using editor.py, onnx2onnx.py, and the NEF Generator.

If I run into any problems or questions while moving on to the next step, I will contact you again. Thank you.

This is "host_stream.ini"

The path to the NEF file is "mnt/flash/vienna/nef".

This is the result of loading the generated NEF file onto the KL630 (transferred using telnet from cmd) and running "source etc/rc.local" in the /mnt/flash/ directory.

What's the problem with the following results?

Hi byeongju,

Based on the log you provided, it seems that rc.local was executed repeatedly. You can rename rc.local to prevent it from running automatically during boot. This way, you can manually execute the program you want to run.