About preprocessing

Hi,

I recently converted a model successfully using the Kneron toolchain. My model's preprocessing is 'tensorflow', as described in the Kneron documentation. I followed the DME examples and set `dme_cfg.image_format` to `IMAGE_FORMAT_SUB128 | NPU_FORMAT_RGB565 | IMAGE_FORMAT_RAW_OUTPUT`. The results look fine.

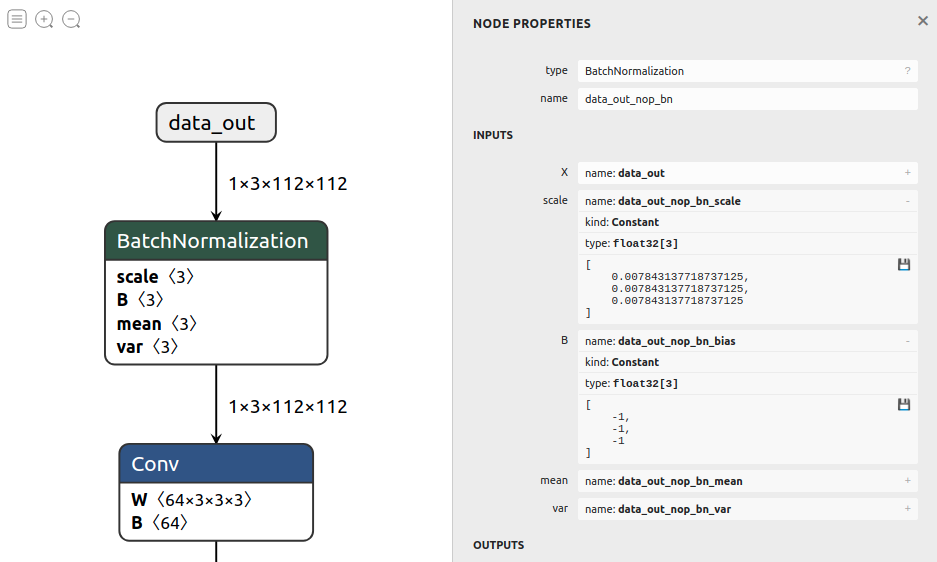

I want to understand the Kneron preprocessing logic better so that I can handle other models with more complex preprocessing. So I set `dme_cfg.image_format` to `IMAGE_FORMAT_BYPASS_PRE | IMAGE_FORMAT_RAW_OUTPUT` and added a `BatchNormalization` node after the model's input node.

I got the following message when running `fpAnalyserCompilerIpevaluator_520.py`, so I assume the input image should be in RGBA format:

```
[common][warning][operation_node_gen.cc:640][AddReformatPrefix] Input format in config rgba8 is RGBA but does not match format of first layer seq8, add identical prefix conv node to handle
```

However, the inference results differ from those I got with the settings in the first paragraph. Here are my modifications:

dme_cfg:

```cpp
struct kdp_dme_cfg_s dme_cfg = create_dme_cfg_struct();
dme_cfg.model_id = 1002;
dme_cfg.output_num = 1;
dme_cfg.image_col = INPUT_IMAGE_SIZE;
dme_cfg.image_row = INPUT_IMAGE_SIZE;
dme_cfg.image_ch = 4;  // RGBA8888: four bytes per pixel
// Skip on-device preprocessing and return the raw model output.
dme_cfg.image_format = IMAGE_FORMAT_BYPASS_PRE | IMAGE_FORMAT_RAW_OUTPUT;
```

Data preprocessing:

```cpp
cv::resize(img, img, cv::Size(INPUT_IMAGE_SIZE, INPUT_IMAGE_SIZE));
cv::cvtColor(img, img, cv::COLOR_BGR2RGBA);
```

Model inference:

```cpp
// Buffer length = width * height * 4 bytes (RGBA8888).
ret = kdp_dme_inference(dev_idx, (char *)img.data, INPUT_IMAGE_SIZE * INPUT_IMAGE_SIZE * 4, &inf_size, &res_flag, (char *)&inf_res, mode, 0);
```
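The buffer length passed to `kdp_dme_inference` above is `INPUT_IMAGE_SIZE * INPUT_IMAGE_SIZE * 4` because RGBA8888 stores four interleaved bytes per pixel. The Python sketch below mimics what `cv::cvtColor(..., cv::COLOR_BGR2RGBA)` does to an 8-bit buffer. It swaps the B and R channels and appends an alpha byte of 255. `bgr_to_rgba` is a hypothetical helper for illustration. It is not part of the Kneron or OpenCV API.

```python
def bgr_to_rgba(bgr: bytes, width: int, height: int) -> bytes:
    """Convert an interleaved BGR888 buffer to interleaved RGBA8888.

    Mirrors cv::cvtColor(..., cv::COLOR_BGR2RGBA) for 8-bit data:
    B and R are swapped and the alpha byte is set to 255.
    """
    if len(bgr) != width * height * 3:
        raise ValueError("BGR buffer must hold width * height * 3 bytes")
    rgba = bytearray()
    for i in range(0, len(bgr), 3):
        b, g, r = bgr[i], bgr[i + 1], bgr[i + 2]
        rgba += bytes((r, g, b, 255))
    return bytes(rgba)


# A 2x1 image: one pure-blue pixel and one pure-red pixel (BGR order).
buf = bgr_to_rgba(bytes((255, 0, 0, 0, 0, 255)), width=2, height=1)
print(len(buf), list(buf))  # prints: 8 [0, 0, 255, 255, 255, 0, 0, 255]
```

The output length is always `width * height * 4`, which is the value passed as the buffer size.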

Comments

I think I found the root cause. The model runs at "int8" precision on Kneron, so the input data should also be in the range [-128, 127].

I converted RGBA8888 to "RGBA7777" (all values divided by two), and the results look much more correct.
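Dividing every 8-bit channel by two ("RGBA7777") maps 0..255 into 0..127. That range fits inside int8, but the least significant bit is lost and negative values never appear. The post does not say how the division was rounded, so this sketch assumes integer halving (`v // 2`). `halve_channels` is a hypothetical name.

```python
def halve_channels(rgba: bytes) -> bytes:
    """Map each uint8 channel (0..255) to 0..127 by integer division by 2."""
    return bytes(v // 2 for v in rgba)


halved = halve_channels(bytes(range(256)))
print(min(halved), max(halved))  # 0 127
# Precision loss: neighbouring inputs collapse onto the same output.
print(halved[200], halved[201])  # 100 100
```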

The warning message `[common][warning][operation_node_gen.cc:640][AddReformatPrefix] Input format in config rgba8 is RGBA but does not match format of first layer seq8, add identical prefix conv node to handle` appears because the "BatchNormalization" operator cannot handle the RGBA8888 input format directly. The compiler therefore adds a dummy "Conv" operator that converts the format for "BatchNormalization". The message only describes what the compiler has done. Functionality is not affected.

That's because the NPU is built on an int8 architecture. If the input data exceeds the int8 range, it will cause errors inside the NPU.
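One way to see why out-of-range values cause trouble: read a byte buffer of uint8 pixels as int8 (two's complement), and every value from 128 to 255 wraps around to a negative number. This sketch assumes the NPU reads the buffer exactly this way. It only illustrates the wrap-around and does not describe the NPU's internals.

```python
import struct


def as_int8(values: bytes) -> list[int]:
    """Reinterpret raw bytes as signed 8-bit integers (two's complement)."""
    return list(struct.unpack(f"{len(values)}b", values))


print(as_int8(bytes((0, 127, 128, 200, 255))))  # [0, 127, -128, -56, -1]
```

A bright pixel such as 200 would be read as -56, which explains why an unscaled RGBA8888 buffer can give wrong results.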

For the tensorflow preprocessing ("-1 ~ 1"), the recommended way to convert the input data from uint8 (0 ~ 255) to int8 (-128 ~ 127) is to subtract 128, not divide by two.
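Subtracting 128 maps 0..255 one-to-one onto the full int8 range. Unlike halving, it merges no input values, and every result fits in int8 without saturation. A quick check (`shift_to_int8` is a hypothetical name):

```python
def shift_to_int8(rgba: bytes) -> list[int]:
    """Map each uint8 channel (0..255) to int8 (-128..127) by subtracting 128."""
    return [v - 128 for v in rgba]


shifted = shift_to_int8(bytes(range(256)))
print(min(shifted), max(shifted))  # -128 127
print(len(set(shifted)))           # 256: no two inputs are merged
```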

However, in my case the inference results with "x - 128" are not as good as those with "x / 2" 🙁. I think the reason is that "x - 128" shifts the data distribution, while "x / 2" keeps a similar distribution at the cost of some precision.

Sorry for the confusion. Your model uses radix = 0 and has a prefix "BatchNormalization" layer. In that setup, some corresponding settings must be changed before you run inference with input "x - 128".

There is also a simpler way. Set the radix to 7 and remove the prefix "BatchNormalization" layer. Radix = 7 means all data is divided by 2^7 before it enters the NPU for inference. With input "x - 128" and radix = 7, the image data matches the tensorflow preprocessing range "-1 ~ 1". Remember that the customized part of img_preprocess.py still has to be modified if your input is "x - 128".
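With a radix r, an int8 value q stands for the real value q / 2^r. The sketch below is plain arithmetic, not toolchain code. It compares the value the NPU sees for input "x - 128" at radix 7 with the tensorflow-mode formula x / 127.5 - 1. That formula is an assumption: it is the usual Keras "tf" preprocessing, and the thread does not spell it out. The two differ by at most 1/128 over all pixel values.

```python
RADIX = 7  # suggested above: real value = int8 value / 2**7


def npu_value(x: int, radix: int = RADIX) -> float:
    """Real value represented by the int8 input (x - 128) at the given radix."""
    q = x - 128  # uint8 pixel shifted into int8 range
    return q / 2 ** radix


def tf_preprocess(x: int) -> float:
    """Tensorflow-mode preprocessing: scale 0..255 to -1..1."""
    return x / 127.5 - 1.0


print(npu_value(0), npu_value(255))  # -1.0 0.9921875
max_err = max(abs(npu_value(x) - tf_preprocess(x)) for x in range(256))
print(max_err)  # 0.0078125, i.e. 1/128, reached at x = 255
```

The only visible difference is the top of the range, where 127 / 128 falls just short of 1.0.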

I followed the simpler approach you suggested but still cannot get correct results, and I'm not sure what I did wrong. With this method, even hardware_validate_520.py fails.

The model now is:

"preprocess" section in input_params.json:

```json
"img_preprocess_method": "customized",
"img_channel": "RGB",
"radix": 7,
```

In img_preprocess.py:

```python
if mode == 'customized':
    print("customized")
    y = x - 128.
    return y
```

In my inference.cpp:

```cpp
dme_cfg.image_format = IMAGE_FORMAT_BYPASS_PRE | IMAGE_FORMAT_RAW_OUTPUT;
cv::Mat img = cv::imread("test.jpg");
cv::cvtColor(img, img, cv::COLOR_BGR2RGBA);
// Shift uint8 0..255 to int8 -128..127. convertTo keeps the input's channel
// count (4 here); only the depth (signed 8-bit) is taken from CV_8SC3.
img.convertTo(img, CV_8SC3, 1.0, -128.0);
ret = kdp_dme_inference(dev_idx, (char *)img.data, buf_len, &inf_size, &res_flag, (char *)&inf_res, mode, 0);
```

The radix setting in input_params.json is only used by the simulator, the toolchain's software simulation of the NPU.

To use radix = 7 in actual NPU inference, add the step y = y / 128 to img_preprocess.py. The toolchain will then assign radix = 7 and divide your inference input data by 2^7.

So the customized branch should be:

```python
if mode == 'customized':
    print("customized")
    y = x - 128.
    y /= 128
    return y
```
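You can check numerically why this fix works. The simulator sees the float value (x - 128) / 128 produced by the customized branch. The NPU receives the int8 value x - 128 and, with radix = 7, interprets it as (x - 128) / 2^7. The two are identical for every pixel value. `customized_preprocess` and `npu_view` are hypothetical stand-alone functions. The real img_preprocess.py signature may differ.

```python
def customized_preprocess(x: list[float]) -> list[float]:
    """Hypothetical stand-alone version of the 'customized' branch."""
    y = [v - 128.0 for v in x]  # shift uint8 0..255 to -128..127
    y = [v / 128 for v in y]    # scale to about -1..1 (toolchain then assigns radix 7)
    return y


def npu_view(x: list[int], radix: int = 7) -> list[float]:
    """What the NPU sees: int8 input (x - 128) interpreted with the given radix."""
    return [(v - 128) / 2 ** radix for v in x]


pixels = list(range(256))
assert customized_preprocess([float(p) for p in pixels]) == npu_view(pixels)
print("simulator and NPU inputs match for all 256 pixel values")
```

Without `y /= 128`, the simulator sees values in -128..127 while the NPU scales them by 2^-7. That mismatch is consistent with the failures reported above.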

Thanks, it works 😀!!