The loss of model conversion is too large

Hi,

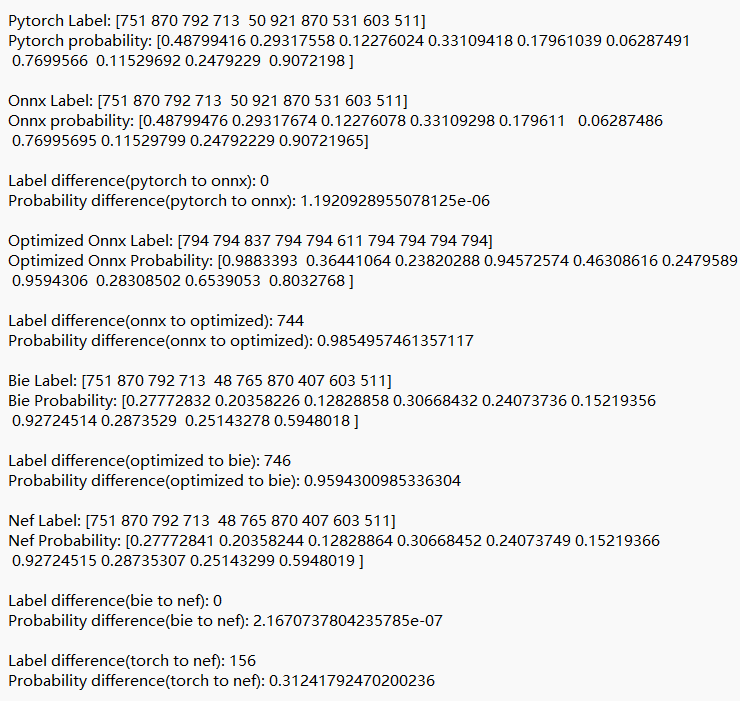

We are using KL720 to test our model. We choose an example model Mobilenet V2 as an example to test our code. Now we have fully completed the model conversion process on docker. But we found that the loss of our model in different file type is large. You can see in the picture below. I wonder is this a normal process or our code is wrong in some extend. Thank you.

The discussion has been closed due to inactivity. To continue with the topic, please feel free to post a new discussion.

Comments

Hi Jin,

Could you provide the following files so that we could check them for you? Thanks!

-Optimized onnx model

-Python script for converting your model in docker

-Input images used in the python script

-Postprocess code for optimized onnx model

-Logs of the correct results

Also, I saw that you said your docker version is 20.10.7. Could you try using the most recent docker? You can pull the latest docker by entering this command:

$ docker pull kneron/toolchain:latest

Hi,

I put all the files in a zip. We are now using toolchain v0.24.0 because our code is now not compatible with the latest version.

Besides, there is no correct log for our model. We just found out that the difference between optimized onnx and bie is so large compared to the difference between pth file and onnx file. We think the loss is too much and hope to find out how to reduce the loss.

Thank you.

Hi Jin,

Thank you for providing the files. We'll take a look at them and get back to you.

How many images did you use to quantize your onnx model into bie model? We recommend you to use around 100 images, relevant to the ones you used to train and inference. That way, your bie model would be more accurate.

Also, did you cut any nodes off when you're converting the onnx model? If so, they would need to be added back in your postprocess function.

Hi,

Because this is not our actual using model, this is an example model we want to test the process of converting models. So we only used 10 images. We will try to use 100 images later.

We didn't cut any nodes off.

Thank you!

Hi,

Since we found the difference between onnx and optimized onnx model is large, we tried to investigate the reason. Then we found out that the conversion from onnx to optimized onnx model causes no loss. Because we tried to use onnx_session.run and ort.InferenceSession to test the two onnx models' results which turned out to be no loss. Therefore, we think the loss happens after using kneron_inference function. Could you investigate why there is huge difference?

Hello,

Could you please provide a specific example to clarify where the issue is? For instance, provide an ONNX file and explain the steps you took, then describe the differences you found abnormal. These steps will help us assist you in analyzing the problem. Thanks.

Hi,

Besides using 100 images related to the ones you used in training and inferencing (the images should have variety as well) for quantization, there are other ways to debug when the onnx and bie results are greatly different:

-Check the channel ordering (NCHW, NHWC), since the output nodes could be ordered differently

-Make sure your preprocess is written in the same way as how images were processed for your model during training (including the normalization), because this would affect the accuracy

-If all the above were correct, it could be a problem in quantization. You could try adjusting the parameters in km.analysis, for example parameter "datapath_bitwidth_mode=int16" (it was originally int8), to see if the accuracy improved

In the experiment, we have an original onnx model "mobilenet_v2-b0353104.onnx", and then optimized it by kneronnxopt.optimize() and got optimized onnx model "mobilenet_v2-b0353104_opt.onnx". For these two models, we did the following:

a) original onnx model: use ort to do inference

b) optimized onnx model: use ktc.kneron_inference() to do inference

c) optimized onnx model: use ort to do inference

What we observed are as follows:

1) the results of a) and b) have huge differences

2) the results of a) and c) are same

3) the results of b) and c) have huge differences

We concluded that:

i) the model optimization is OK

ii) the output of ktc.kneron_inference() is wrong

Can you please verify whether our conclusions are correct? If it is not, any suggestions are welcomed. Thanks.

@JIN QIAN

Hi JIN QIAN,

When you use onnx ktc.inference, you can try changing input_data to a list. This should solve your problem, as shown in the following example.

You may refer to these link:

https://doc.kneron.com/docs/#toolchain/manual_3_onnx/#33-e2e-simulator-check-floating-point

https://doc.kneron.com/docs/#toolchain/appendix/app_flow_manual/#python-api-inference

Hi,

In this experiment, we compared predictions of the nef model on docker and on usb (i.e. the Kneron 720 chip), and our results show that these two predictions are very different. We have prediction codes as follows:

a) prediction of the nef model on docker: docker_nef_prediction.py. The results were saved in nef_docker_results.xlsx.

b) prediction of the nef model on usb: usb_nef_prediction.py. The results were saved in nef_usb_results.xlsx.

We have the prediction comparison codes as follows:

c) nef_results_comparison.py. The results were saved in "results_compare.xlsx".

We guess that the above difference is due to the preprocessing from codes a) and b) are different. Can you please provide any comments or suggestions on this issue?

Besides, any comments or suggestions regarding this experiments are welcomed if there are something problems in the codes. Thank you.

@JIN QIAN

Hi JIN QIAN,

I think you should check whether the preprocessing during model training and when using the Kneron Toolchain for quantization is consistent with the preprocessing during inference. It should be consistent.