Keras Model (Movinet) to nef conversion

I'm trying to convert Movinet (Keras), a video classification model, into a NEF file and deploy it on a Kneron device.

https://www.tensorflow.org/hub/tutorials/movinet?hl=ko

In the case of Movinet, the model is saved as the attached hdf5 file.

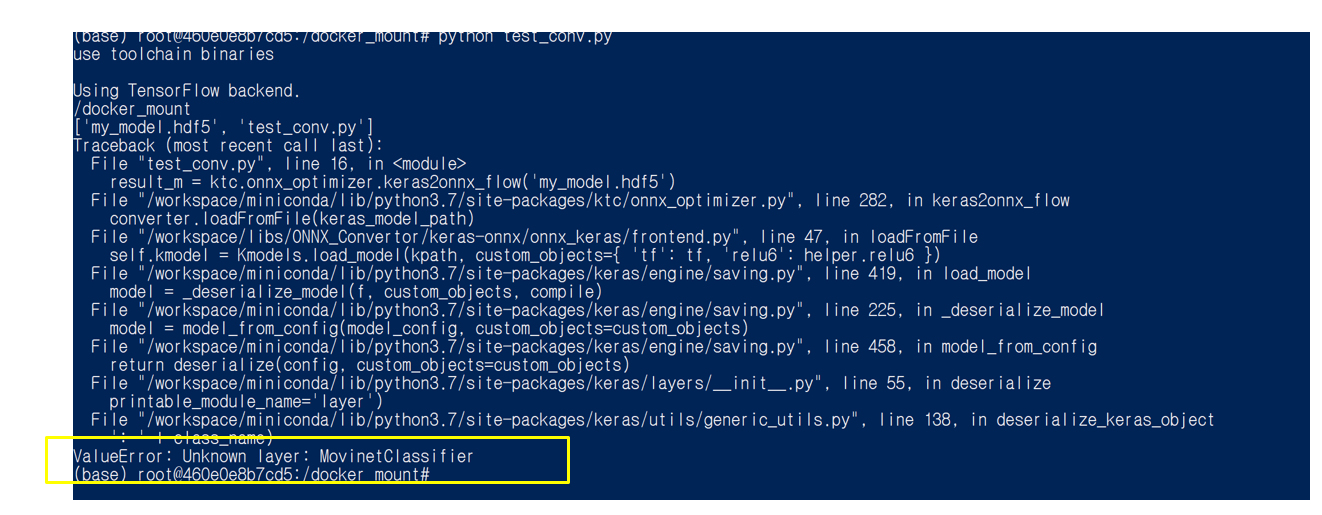

When converting the Keras weights as in the example, the following error is generated:

(base) root@460e0e8b7cd5:/docker_mount# python test_conv.py

use toolchain binaries

Using TensorFlow backend.

/docker_mount

['my_model.hdf5', 'test_conv.py']

Traceback (most recent call last):

File "test_conv.py", line 16, in <module>

result_m = ktc.onnx_optimizer.keras2onnx_flow('my_model.hdf5')

File "/workspace/miniconda/lib/python3.7/site-packages/ktc/onnx_optimizer.py", line 282, in keras2onnx_flow

converter.loadFromFile(keras_model_path)

File "/workspace/libs/ONNX_Convertor/keras-onnx/onnx_keras/frontend.py", line 47, in loadFromFile

self.kmodel = Kmodels.load_model(kpath, custom_objects={ 'tf': tf, 'relu6': helper.relu6 })

File "/workspace/miniconda/lib/python3.7/site-packages/keras/engine/saving.py", line 419, in load_model

model = _deserialize_model(f, custom_objects, compile)

File "/workspace/miniconda/lib/python3.7/site-packages/keras/engine/saving.py", line 225, in _deserialize_model

model = model_from_config(model_config, custom_objects=custom_objects)

File "/workspace/miniconda/lib/python3.7/site-packages/keras/engine/saving.py", line 458, in model_from_config

return deserialize(config, custom_objects=custom_objects)

File "/workspace/miniconda/lib/python3.7/site-packages/keras/layers/__init__.py", line 55, in deserialize

printable_module_name='layer')

File "/workspace/miniconda/lib/python3.7/site-packages/keras/utils/generic_utils.py", line 138, in deserialize_keras_object

': ' + class_name)

ValueError: Unknown layer: MovinetClassifier

I would like to ask whether conversion is possible and, if so, how to proceed.

Comments

Hi Youngjun,

We recommend converting the hdf5 file into an onnx file with a third-party converter instead of the toolchain's keras2onnx flow, then continuing the NEF conversion flow with that onnx file.

Do you mean this kind of third-party converter?

Or could you recommend another third-party converter? Please let me know.

Hi Youngjun,

Yes, you could try converting the keras model to onnx with keras2onnx.

There's also tensorflow-onnx, which you could use if you can convert the Keras model to a TensorFlow model: https://github.com/onnx/tensorflow-onnx
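For reference, tensorflow-onnx is usually run through its `tf2onnx.convert` command-line entry point, using the `--saved-model` or `--tflite` input flags together with `--output` and `--opset`. The sketch below only builds and optionally runs that command; it assumes tf2onnx is installed in a separate TensorFlow 2.x environment (not the toolchain docker), and the file names are placeholders. Pinning `--opset` explicitly keeps the converter from emitting a newer default opset than the Kneron toolchain accepts.

```python
import subprocess
import sys


def build_tf2onnx_command(model_path, output_path, source="saved-model", opset=12):
    """Build a tf2onnx CLI call for a SavedModel directory or a .tflite file.

    source must be "saved-model" or "tflite"; it maps to tf2onnx's
    --saved-model / --tflite input flags.
    """
    if source not in ("saved-model", "tflite"):
        raise ValueError(f"unsupported source: {source}")
    return [
        sys.executable, "-m", "tf2onnx.convert",
        f"--{source}", model_path,
        "--output", output_path,
        "--opset", str(opset),
    ]


def convert(model_path, output_path, source="saved-model", opset=12):
    # check=True raises CalledProcessError if the conversion fails
    cmd = build_tf2onnx_command(model_path, output_path, source, opset)
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical file names; replace them with your own exported model.
    print(build_tf2onnx_command("movinet.tflite", "movinet.onnx", source="tflite"))
```

Calling `convert("movinet.tflite", "movinet.onnx", source="tflite")` would then run the conversion in the current Python environment.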

Thank you Maria,

Following your advice, I tried to convert my weights.

However, the third-party converter (keras2onnx) produces the same error.

I think this error is caused by a version mismatch between the toolchain docker environment and the environment Movinet was built with.

For example, the toolchain docker uses TensorFlow 1.15, but the Movinet weights were saved with TensorFlow 2.14.

How can I resolve this environment mismatch? Could you give me some advice?

Hi Youngjun,

Sorry for the late reply.

Because of that version mismatch, we couldn't convert your hdf5 file into an onnx file, but we were able to convert the model from the Movinet website with the following steps:

Please note that the onnx file you get will contain operators not supported by our NPU, so you will need to handle them separately.

Dear Maria Chen,

Thank you for your kind reply.

I succeeded in converting the tflite model to ONNX.

However, when I tried to convert the ONNX model to NEF with the following code,

import ktc

import os

import onnx

print(os.getcwd())

print(os.listdir(os.getcwd()))

km = ktc.ModelConfig(1, "0001", "630", onnx_path="/movinet.onnx")

# Compile the model into a NEF file.

compile_result = ktc.compile([km])

I got the following errors:

(base) root@8fada36cc013:/docker_mount# python test_conv_onnx2nef.py

use toolchain binaries

Using TensorFlow backend.

/docker_mount

['movinet.onnx', 'my_model.hdf5', 'test_conv.py', 'test_conv_kreras2_onnx.py', 'test_conv_kreras2_onnx_ana.py', 'test_conv_onnx2nef.py']

ERROR:root:Analysis is required before compile.

[tool][info][batch_compile.cc:1179][main] Use compiler built by commit [8ab27a12]

[tool][info][batch_compile.cc:513][BatchCompile] compiling movinet.onnx

[common][info][compile.cc:54][LoadCfg] Loading config.

[piano][info][graph_gen.cc:27][LoadOnnxModel] Loading onnx file.

[common][error][exceptions.cc:41][KneronException] InvalidProgramInput: Cannot find input onnx

[common][info][utils.cc:354][DumpBacktrace] dump backtrace

Stack trace (most recent call last):

#10 Object "", at 0xffffffffffffffff, in

#9 Object "/workspace/libs/compiler/batch_compile", at 0x562a114e950d, in _start

BFD: DWARF error: section .debug_info is larger than its filesize! (0x93f189 vs 0x530f70)

#8 Object "/lib/x86_64-linux-gnu/libc.so.6", at 0x7fb37686e082, in __libc_start_main

#7 Source "/projects_src/kneron-piano/compiler/bin/batch_compile/batch_compile.cc", line 1182, in main [0x562a114e7045]

#6 | Source "/projects_src/kneron-piano/compiler/bin/batch_compile/batch_compile.cc", line 526, in BatchCompile

Source "/projects_src/kneron-piano/compiler/bin/batch_compile/batch_compile.cc", line 489, in (anonymous namespace)::BatchCompile(App_Args&) [0x562a114ea44a]

#5 Source "/projects_src/kneron-piano/compiler/lib/common/public/compile_impl.h", line 70, in Compile(char const*, char const*, Json::Value const&, FirmInfo*) [0x562a114f6ce5]

#4 Source "/projects_src/kneron-piano/compiler/lib/schubert/backend/compile.cc", line 272, in CompileImpl [0x7fb375b36d21]

#3 Source "/projects_src/kneron-piano/piano/lib/piano/graph/graph_gen.cc", line 154, in piano::LoadModel(char const*) [0x7fb376fcca5a]

#2 | Source "/projects_src/kneron-piano/piano/lib/piano/graph/graph_gen.cc", line 31, in LoadOnnxModel

Source "/projects_src/kneron-piano/piano/lib/piano/common/exceptions.h", line 38, in InvalidProgramInput::InvalidProgramInput(std::string const&) [0x7fb376f01258]

#1 Source "/projects_src/kneron-piano/piano/lib/piano/common/exceptions.cc", line 44, in KneronException::KneronException(ErrorCode, std::string const&) [0x7fb376f765f3]

#0 Source "/projects_src/kneron-piano/piano/lib/piano/common/utils.cc", line 356, in DumpBacktrace() [0x7fb376f99998]BFD: DWARF error: section .debug_info is larger than its filesize! (0x93f189 vs 0x530f70)

[tool][error][batch_compile.cc:528][BatchCompile] Failed to compile movinet.onnx

[tool][info][batch_compile.cc:1236][main] batch_compile complete[2]

Traceback (most recent call last):

File "test_conv_onnx2nef.py", line 22, in <module>

compile_result = ktc.compile([km])

File "/workspace/miniconda/lib/python3.7/site-packages/ktc/toolchain.py", line 407, in compile

debug=debug,

File "/workspace/miniconda/lib/python3.7/site-packages/ktc/toolchain.py", line 539, in encrypt_compile

subprocess.run(commands, check=True)

File "/workspace/miniconda/lib/python3.7/subprocess.py", line 512, in run

output=stdout, stderr=stderr)

subprocess.CalledProcessError: Command '['/workspace/libs/compiler/batch_compile', '-T', '630', '/data1/kneron_flow/batch_compile_bconfig.json', '-s', '-t', 'kneron/toolchain:v0.24.0\n', '-o']' returned non-zero exit status 2.

How can I handle this?

Thank you.

I had made a mistake in the onnx file path. After fixing it, I got the following errors:

Using TensorFlow backend.

/docker_mount

['movinet.onnx', 'movinet.zip', 'my_model.hdf5', 'test_conv.py', 'test_conv_kreras2_onnx.py', 'test_conv_kreras2_onnx_ana.py', 'test_conv_onnx2nef.py']

ERROR:root:Analysis is required before compile.

[tool][info][batch_compile.cc:1179][main] Use compiler built by commit [8ab27a12]

[tool][info][batch_compile.cc:513][BatchCompile] compiling movinet.onnx

[common][info][compile.cc:54][LoadCfg] Loading config.

[piano][info][graph_gen.cc:27][LoadOnnxModel] Loading onnx file.

[piano][info][graph_gen.cc:56][LoadOnnxModel] Creating graph from onnx.

[piano][debug][graph.cc:203][constructGraph] Start constructing graph.

Stack trace (most recent call last):

#12 Object "", at 0xffffffffffffffff, in

#11 Object "/workspace/libs/compiler/batch_compile", at 0x55e886a0f50d, in _start

BFD: DWARF error: section .debug_info is larger than its filesize! (0x93f189 vs 0x530f70)

#10 Object "/lib/x86_64-linux-gnu/libc.so.6", at 0x7fe69a1cd082, in __libc_start_main

#9 Source "/projects_src/kneron-piano/compiler/bin/batch_compile/batch_compile.cc", line 1182, in main [0x55e886a0d045]

#8 | Source "/projects_src/kneron-piano/compiler/bin/batch_compile/batch_compile.cc", line 526, in BatchCompile

Source "/projects_src/kneron-piano/compiler/bin/batch_compile/batch_compile.cc", line 489, in (anonymous namespace)::BatchCompile(App_Args&) [0x55e886a1044a]

#7 Source "/projects_src/kneron-piano/compiler/lib/common/public/compile_impl.h", line 70, in Compile(char const*, char const*, Json::Value const&, FirmInfo*) [0x55e886a1cce5]

#6 Source "/projects_src/kneron-piano/compiler/lib/schubert/backend/compile.cc", line 272, in CompileImpl [0x7fe699495d21]

#5 Source "/projects_src/kneron-piano/piano/lib/piano/graph/graph_gen.cc", line 154, in piano::LoadModel(char const*) [0x7fe69a92ba5a]

#4 | Source "/projects_src/kneron-piano/piano/lib/piano/graph/graph_gen.cc", line 58, in LoadOnnxModel

| Source "/usr/include/c++/9/bits/shared_ptr.h", line 718, in std::shared_ptr<Graph> std::make_shared<Graph, kneron_onnx::ModelProto&, char*&>(kneron_onnx::ModelProto&, char*&)

| 716: typedef typename std::remove_cv<_Tp>::type _Tp_nc;

| 717: return std::allocate_shared<_Tp>(std::allocator<_Tp_nc>(),

| > 718: std::forward<_Args>(__args)...);

| 719: }

| Source "/usr/include/c++/9/bits/shared_ptr.h", line 702, in std::shared_ptr<Graph> std::allocate_shared<Graph, std::allocator<Graph>, kneron_onnx::ModelProto&, char*&>(std::allocator<Graph> const&, kneron_onnx::ModelProto&, char*&)

| 700: {

| 701: return shared_ptr<_Tp>(_Sp_alloc_shared_tag<_Alloc>{__a},

| > 702: std::forward<_Args>(__args)...);

| 703: }

| Source "/usr/include/c++/9/bits/shared_ptr.h", line 359, in std::shared_ptr<Graph>::shared_ptr<std::allocator<Graph>, kneron_onnx::ModelProto&, char*&>(std::_Sp_alloc_shared_tag<std::allocator<Graph> >, kneron_onnx::ModelProto&, char*&)

| 357: template<typename _Alloc, typename... _Args>

| 358: shared_ptr(_Sp_alloc_shared_tag<_Alloc> __tag, _Args&&... __args)

| > 359: : __shared_ptr<_Tp>(__tag, std::forward<_Args>(__args)...)

| 360: { }

| Source "/usr/include/c++/9/bits/shared_ptr_base.h", line 1344, in std::__shared_ptr<Graph, (__gnu_cxx::_Lock_policy)2>::__shared_ptr<std::allocator<Graph>, kneron_onnx::ModelProto&, char*&>(std::_Sp_alloc_shared_tag<std::allocator<Graph> >, kneron_onnx::ModelProto&, char*&)

| 1342: template<typename _Alloc, typename... _Args>

| 1343: __shared_ptr(_Sp_alloc_shared_tag<_Alloc> __tag, _Args&&... __args)

| >1344: : _M_ptr(), _M_refcount(_M_ptr, __tag, std::forward<_Args>(__args)...)

| 1345: { _M_enable_shared_from_this_with(_M_ptr); }

| Source "/usr/include/c++/9/bits/shared_ptr_base.h", line 679, in std::__shared_count<(__gnu_cxx::_Lock_policy)2>::__shared_count<Graph, std::allocator<Graph>, kneron_onnx::ModelProto&, char*&>(Graph*&, std::_Sp_alloc_shared_tag<std::allocator<Graph> >, kneron_onnx::ModelProto&, char*&)

| 677: auto __guard = std::__allocate_guarded(__a2);

| 678: _Sp_cp_type* __mem = __guard.get();

| > 679: auto __pi = ::new (__mem)

| 680: _Sp_cp_type(__a._M_a, std::forward<_Args>(__args)...);

| 681: __guard = nullptr;

| Source "/usr/include/c++/9/bits/shared_ptr_base.h", line 548, in std::_Sp_counted_ptr_inplace<Graph, std::allocator<Graph>, (__gnu_cxx::_Lock_policy)2>::_Sp_counted_ptr_inplace<kneron_onnx::ModelProto&, char*&>(std::allocator<Graph>, kneron_onnx::ModelProto&, char*&)

| 546: // _GLIBCXX_RESOLVE_LIB_DEFECTS

| 547: // 2070. allocate_shared should use allocator_traits<A>::construct

| > 548: allocator_traits<_Alloc>::construct(__a, _M_ptr(),

| 549: std::forward<_Args>(__args)...); // might throw

| 550: }

| Source "/usr/include/c++/9/bits/alloc_traits.h", line 483, in void std::allocator_traits<std::allocator<Graph> >::construct<Graph, kneron_onnx::ModelProto&, char*&>(std::allocator<Graph>&, Graph*, kneron_onnx::ModelProto&, char*&)

| 481: construct(allocator_type& __a, _Up* __p, _Args&&... __args)

| 482: noexcept(std::is_nothrow_constructible<_Up, _Args...>::value)

| > 483: { __a.construct(__p, std::forward<_Args>(__args)...); }

| 484:

| 485: /**

Source "/usr/include/c++/9/ext/new_allocator.h", line 146, in void __gnu_cxx::new_allocator<Graph>::construct<Graph, kneron_onnx::ModelProto&, char*&>(Graph*, kneron_onnx::ModelProto&, char*&) [0x7fe69a92ad3e]

143: void

144: construct(_Up* __p, _Args&&... __args)

145: noexcept(std::is_nothrow_constructible<_Up, _Args...>::value)

> 146: { ::new((void *)__p) _Up(std::forward<_Args>(__args)...); }

147:

148: template<typename _Up>

149: void

#3 Source "/projects_src/kneron-piano/piano/lib/piano/graph/graph.cc", line 491, in Graph::Graph(kneron_onnx::ModelProto const&, char const*) [0x7fe69a91f208]

#2 Source "/projects_src/kneron-piano/piano/lib/piano/graph/graph.cc", line 271, in Graph::constructGraph(kneron_onnx::GraphProto const&, int, bool, char const*) [0x7fe69a91da2e]

#1 Source "/projects_src/kneron-piano/piano/lib/piano/graph/node.cc", line 150, in Node::SetupShape(kneron_onnx::ValueInfoProto const&) [0x7fe69a9343d8]

#0 | Source "/projects_src/kneron-piano/piano/lib/piano/graph/value_info.cc", line 48, in ValueInfo::ValueInfo(kneron_onnx::ValueInfoProto const&)

Source "/projects_src/kneron-piano/piano/lib/piano/onnx/onnx.proto3.pb.h", line 5710, in kneron_onnx::ValueInfoProto::type() const [0x7fe69a9c859e]

BFD: DWARF error: section .debug_info is larger than its filesize! (0x93f189 vs 0x530f70)

Segmentation fault (Address not mapped to object [0x20])

Traceback (most recent call last):

File "test_conv_onnx2nef.py", line 19, in <module>

compile_result = ktc.compile([km])

File "/workspace/miniconda/lib/python3.7/site-packages/ktc/toolchain.py", line 407, in compile

debug=debug,

File "/workspace/miniconda/lib/python3.7/site-packages/ktc/toolchain.py", line 539, in encrypt_compile

subprocess.run(commands, check=True)

File "/workspace/miniconda/lib/python3.7/subprocess.py", line 512, in run

output=stdout, stderr=stderr)

subprocess.CalledProcessError: Command '['/workspace/libs/compiler/batch_compile', '-T', '630', '/data1/kneron_flow/batch_compile_bconfig.json', '-s', '-t', 'kneron/toolchain:v0.24.0\n', '-o']' died with <Signals.SIGSEGV: 11>.

Hi Youngjun,

Your error message says: ERROR:root:Analysis is required before compile. This error appears because you didn't run km.analysis before calling ktc.compile.

Please refer to this part in the documentation center on how to convert an onnx model to NEF: 1. Toolchain Manual Overview - Document Center (kneron.com)
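The expected order is evaluate (optional IP Evaluator report), then analysis (quantization, which needs calibration inputs), then compile. A minimal sketch, assuming the `ktc` API used in this thread (`ktc.ModelConfig`, `km.evaluate()`, `km.analysis()`, `ktc.compile()`) and assuming `analysis` takes a dict mapping the model's input name to a list of numpy arrays; check the exact arguments against the Document Center for your toolchain version. The input name, input shape, and random calibration data are placeholders only; real calibration should use preprocessed video frames.

```python
import numpy as np


def make_input_mapping(input_name, input_shape, num_samples=8, seed=0):
    """Build the {input_name: [array, ...]} dict passed to km.analysis().

    Random data is a placeholder: quantization quality depends on
    calibrating with real, preprocessed inputs.
    """
    rng = np.random.default_rng(seed)
    return {
        input_name: [rng.random(input_shape, dtype=np.float32) for _ in range(num_samples)]
    }


def onnx_to_nef(onnx_path, input_name, input_shape):
    import ktc  # only available inside the Kneron toolchain docker

    km = ktc.ModelConfig(1, "0001", "630", onnx_path=onnx_path)
    print(km.evaluate())  # IP Evaluator report, returned as a string
    bie_path = km.analysis(make_input_mapping(input_name, input_shape))
    print("BIE:", bie_path)
    return ktc.compile([km])  # compile only after analysis has run
```

Here `input_name` and `input_shape` are hypothetical arguments; read the real values from the optimized onnx model (for example in Netron).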

Hi Maria,

Thank you for your quick reply.

When I followed your instructions, the `eval_result` step (km.evaluate()) failed.

---------------------------------------------------------------------------------------------------

km = ktc.ModelConfig(1, "0001", "630", onnx_path="./movinet.onnx")

# Evaluate the model. The evaluation result is saved as string into `eval_result`.

eval_result = km.evaluate()

---------------------------------------------------------------------------------------------------

Is Movinet not supported? If it is supported, how can I proceed to the next step?

Hi Youngjun,

Have you converted your onnx file into the supported onnx format by running onnx2onnx_flow in the toolchain?

The model is supported as long as the operators are supported by KL630: Hardware Supported Operators - Document Center (kneron.com)

Also, please note that the onnx file you get will contain operators not supported by our NPU, and you will need to handle them separately: use the Model Editor to cut out the unsupported operators, then implement that processing in your preprocessing or postprocessing code so the model still works: ONNX Converter - Document Center (kneron.com)
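Before cutting anything, it can help to list which operator types the graph contains and how often each appears, then compare them with the KL630 list. A minimal sketch: `SUPPORTED_KL630` is a hypothetical, deliberately incomplete placeholder that must be filled from the Hardware Supported Operators page, and loading uses `onnx.load`, imported lazily. Only the top-level graph is scanned; subgraphs inside If/Loop nodes are not visited.

```python
from collections import Counter

# Hypothetical placeholder: replace with the operators listed on the
# "Hardware Supported Operators" page for KL630. Not an authoritative list.
SUPPORTED_KL630 = {"Conv", "Relu", "Add", "Mul", "BatchNormalization"}


def unsupported_ops(op_types, supported=SUPPORTED_KL630):
    """Return {op_type: count} for every op type not found in `supported`."""
    counts = Counter(op_types)
    return {op: n for op, n in sorted(counts.items()) if op not in supported}


def op_types_in_model(onnx_path):
    """List the op types of the top-level graph, in node order."""
    import onnx  # lazy import so unsupported_ops can be used without onnx

    model = onnx.load(onnx_path)
    return [node.op_type for node in model.graph.node]
```

For example, `print(unsupported_ops(op_types_in_model("optimized.onnx")))` would print the op types to look up, with how many nodes of each the graph contains.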

Dear Maria,

As you suggested, I ran onnx2onnx_flow in the toolchain on the onnx file with this code:

-----------------------------------------------------------------------------------------------------------------------------------

import onnx

# Import the ktc package, which is our Python API.

import ktc

# 1. Load the model.

original_m = onnx.load("./movinet.onnx")

# 2. Optimize the model using the optimizer for onnx models.

optimized_m = ktc.onnx_optimizer.onnx2onnx_flow(original_m)

# Save the onnx object optimized_m to path optimized.onnx.

onnx.save(optimized_m, "optimized.onnx")

km = ktc.ModelConfig(1, "0001", "630", onnx_path="./optimized.onnx")

-----------------------------------------------------------------------------------------------------------------------------------

However, I got the following errors when running onnx2onnx_flow (ktc.onnx_optimizer.onnx2onnx_flow(original_m)).

Maybe the HardSwish operator needs to be modified for the conversion.

I converted the tflite file to onnx with onnx 1.16.

But the toolchain's onnx version is 1.07, so maybe the two onnx versions are not compatible for this conversion.

Can this be resolved by matching the onnx version used by tf2onnx with the one in the ktc docker?

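I then checked the opset version of the converted onnx file: it is 15.

import onnx

# Opset version check. Read the default-domain ("" or "ai.onnx") entry, because opset_import[0] is not guaranteed to be that domain.

model = onnx.load("./movinet.onnx")

opset_version = next((imp.version for imp in model.opset_import if imp.domain in ("", "ai.onnx")), None)

print(opset_version)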

HardSwish requires opset 14, which is newer than what the toolchain's onnx version (1.07) supports.

I wonder whether this problem can be resolved by matching the onnx version used by tf2onnx with the one in the ktc docker.

Please advise me on how to solve this problem.
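For context, the ONNX specification defines HardSwish (opset 14) as y = x · max(0, min(1, x/6 + 1/2)), and HardSigmoid (available long before opset 11) as max(0, min(1, alpha·x + beta)), with defaults alpha = 0.2 and beta = 0.5. So HardSwish equals x multiplied by HardSigmoid with alpha = 1/6 and beta = 0.5. The plain-Python check below confirms the identity numerically; it says nothing about whether KL630 runs HardSigmoid and Mul on the NPU, which still has to be checked against the supported-operator list.

```python
def hard_sigmoid(x, alpha=0.2, beta=0.5):
    # ONNX HardSigmoid: max(0, min(1, alpha * x + beta)); 0.2 / 0.5 are the ONNX defaults
    return max(0.0, min(1.0, alpha * x + beta))


def hard_swish(x):
    # ONNX HardSwish (opset 14): x * max(0, min(1, x / 6 + 0.5))
    return x * max(0.0, min(1.0, x / 6 + 0.5))


def hard_swish_decomposed(x):
    # Same function built only from ops that exist before opset 14
    return x * hard_sigmoid(x, alpha=1 / 6, beta=0.5)


if __name__ == "__main__":
    samples = [i / 4 for i in range(-40, 41)]  # -10.0 .. 10.0 in steps of 0.25
    worst = max(abs(hard_swish(x) - hard_swish_decomposed(x)) for x in samples)
    print(f"max abs difference: {worst}")
```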

Hi Maria,

After loading the onnx file in Netron, I found HardSwish nodes, which require opset 14, so I want to delete these nodes and replace them with nodes that ktc supports.

Some information may be provided in the following link,

However, could you show me an example of replacing specific nodes with other nodes?

I want to change HardSwish to another node, such as Relu or a user-defined node.

Could you guide me on how to do this?

I attached the graph file.
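A minimal sketch of one way to replace each HardSwish node with the exact HardSigmoid (alpha=1/6, beta=0.5) + Mul pair from the ONNX definition. This is an alternative to the ReLU swap discussed below, not a method the Kneron documentation describes. Assumptions: the `onnx` package is available (only `onnx.helper.make_node`, `NodeProto.CopyFrom`, and edits of `model.graph.node` are used); KL630 support for HardSigmoid is unverified; and the model's opset number still has to be lowered to 12 separately. Other opset-13+ ops (for example Squeeze/Unsqueeze, whose axes became an input in opset 13) would need similar treatment, so re-exporting at opset 12 may be the simpler route if the converter supports it. The planning step is kept free of onnx so it can be tested on its own.

```python
from collections import namedtuple

# Plain description of a node, so the planning step can be tested without onnx.
NodeSpec = namedtuple("NodeSpec", "op_type inputs outputs name attrs")


def plan_hardswish_replacement(node):
    """Return the NodeSpecs that replace one HardSwish node (other ops pass through).

    HardSwish(x) == x * HardSigmoid(x; alpha=1/6, beta=0.5).
    """
    if node.op_type != "HardSwish":
        return [node]
    x, y = node.inputs[0], node.outputs[0]
    # Tensor names are unique in an ONNX graph, so derive the new names from y.
    gate = f"{y}_hardsigmoid_gate"
    return [
        NodeSpec("HardSigmoid", [x], [gate], f"{y}_hardsigmoid",
                 {"alpha": 1.0 / 6.0, "beta": 0.5}),
        NodeSpec("Mul", [x, gate], [y], f"{y}_mul", {}),
    ]


def replace_hardswish(model):
    """Rewrite the nodes of an onnx.ModelProto in place and return it.

    Node order is preserved, so a topologically sorted graph stays sorted.
    """
    from onnx import NodeProto, helper  # lazy import: only needed for real models

    new_nodes = []
    for node in model.graph.node:
        if node.op_type != "HardSwish":
            kept = NodeProto()
            kept.CopyFrom(node)  # copy before the repeated field is cleared below
            new_nodes.append(kept)
            continue
        spec = NodeSpec(node.op_type, list(node.input), list(node.output), node.name, {})
        for s in plan_hardswish_replacement(spec):
            new_nodes.append(
                helper.make_node(s.op_type, s.inputs, s.outputs, name=s.name, **s.attrs)
            )
    del model.graph.node[:]
    model.graph.node.extend(new_nodes)
    return model
```

Typical use would be `onnx.save(replace_hardswish(onnx.load("movinet.onnx")), "movinet_no_hardswish.onnx")` (hypothetical output name), followed by re-running onnx2onnx_flow.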

Hi Youngjun,

Our Kneron toolchain supports onnx opset 11 and 12, so you'll need to make sure all the operators match opset 11 or 12.

According to your onnx model structure, Hardswish nodes appear throughout the model, so we can't simply cut them out and move them into a postprocessing function. As you suggested, we recommend changing Hardswish to similar operators (Relu, Prelu, etc.), but since these operators aren't equivalent, you will need to retrain your original model (in Keras, PyTorch, TensorFlow, etc.).

Hardswish is an activation function, so you could change it to any other activation function (Relu, Prelu, etc.) as long as you can maintain the accuracy and the function is supported by our NPU.

As for other operators such as Transpose, Concat, and Reshape, you can run the model through the IP Evaluator to see which operators are supported. If any are unsupported, edit them out of the model in Keras, PyTorch, or TensorFlow, retrain the model, and then export it to tflite or onnx.
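To make the point that Hardswish and Relu are not interchangeable concrete, the plain-Python comparison below evaluates both at a few points. They agree for x ≤ -3 (both 0), at x = 0, and for x ≥ 3 (both x), but differ elsewhere in between, which is why swapping the activation changes the network's outputs and calls for retraining.

```python
def relu(x):
    return max(0.0, x)


def hard_swish(x):
    # x * relu6(x + 3) / 6, equivalent to the ONNX HardSwish definition
    return x * min(6.0, max(0.0, x + 3.0)) / 6.0


if __name__ == "__main__":
    for x in (-4.0, -1.0, 0.0, 1.0, 2.0, 4.0):
        print(f"x={x:+.1f}  hardswish={hard_swish(x):+.4f}  relu={relu(x):+.4f}")
```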