Inference with KL720

Hi,

We have built a 3-channel TCN model and converted it to a NEF file for the KL720. The input data is supposed to be np.random.rand(1, 1000, 1, 3), but the KL720 always returns "Error: inference failed, error = Error raised in function: generic_raw_inference_receive. Error code: 103. Description: ApiReturnCode.KP_FW_INFERENCE_TIMEOUT_103".

We have 3 questions and need your help:

- Are the steps in my code for running inference correct?

- Is the error caused by the input data not being in an image format?

- Following on from question 2, should we force the input data into an image format, and is that the right way to use the KL720?

Code used to read the model information:

    """
    load model from device flash
    """
    model_nef_descriptor = None
    try:
        print('[Load Model from Flash]')
        model_nef_descriptor = kp.core.load_model_from_flash(device_group=device_group)
        print(' - Success')

        print('[Model NEF Information]')
        print(model_nef_descriptor)
    except kp.ApiKPException as exception:
        print('Error: load model from device flash failed, error = \'{}\''.format(str(exception)))
        exit(0)

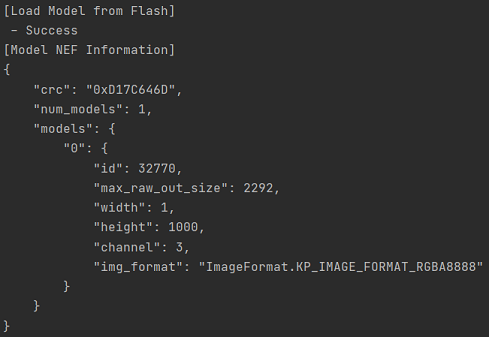

The result of model information:

And the code used to run inference:

    img_buffer = np.random.rand(1, 1000, 1, 3)

    LOOP_TIME = 1

    """
    prepare app generic inference config
    """
    generic_raw_image_header = kp.GenericRawImageHeader(
        model_id=model_nef_descriptor.models[0].id,
        resize_mode=kp.ResizeMode.KP_RESIZE_ENABLE,
        padding_mode=kp.PaddingMode.KP_PADDING_CORNER,
        normalize_mode=kp.NormalizeMode.KP_NORMALIZE_KNERON,
        inference_number=0
    )

    """
    starting inference work
    """
    print('[Starting Inference Work]')
    print(' - Starting inference loop {} times'.format(LOOP_TIME))
    print(' - ', end='')
    for i in range(LOOP_TIME):
        try:
            kp.inference.generic_raw_inference_send(device_group=device_group,
                                                    generic_raw_image_header=generic_raw_image_header,
                                                    image=img_buffer,
                                                    image_format=kp.ImageFormat.KP_IMAGE_FORMAT_RGBA8888)

            generic_raw_result = kp.inference.generic_raw_inference_receive(device_group=device_group,
                                                                            generic_raw_image_header=generic_raw_image_header,
                                                                            model_nef_descriptor=model_nef_descriptor)
        except kp.ApiKPException as exception:
            print(' - Error: inference failed, error = {}'.format(exception))
            exit(0)

        print('.', end='', flush=True)
    print()
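
One thing that may be worth checking before sending is the size and element type of the buffer. The sketch below is not from the original post and uses only the Python standard library. It compares the byte size of a float64 tensor shaped (1, 1000, 1, 3), which is what np.random.rand produces, with the byte size of a 1000x1 RGBA8888 image at 4 bytes per pixel. The helper names are hypothetical, and the mismatch is only a candidate cause of the timeout, not a confirmed one.

```python
from math import prod

FLOAT64_BYTES = 8   # np.random.rand returns float64 values
RGBA8888_BYTES = 4  # one byte each for R, G, B and A


def tensor_nbytes(shape, itemsize):
    """Byte size of a dense tensor with the given shape and element size."""
    return prod(shape) * itemsize


def rgba8888_nbytes(height, width):
    """Byte size of a height x width RGBA8888 image."""
    return height * width * RGBA8888_BYTES


random_input = tensor_nbytes((1, 1000, 1, 3), FLOAT64_BYTES)
image_input = rgba8888_nbytes(height=1000, width=1)
print(random_input, image_input)
```

The random tensor occupies 24000 bytes, while a 1000x1 RGBA8888 image occupies 4000 bytes, so the two buffers are not interchangeable as they stand.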


Comments

Hi,

We haven't received a response yet, so we built a 4-channel model to test. Unfortunately, the KL720 still returns error code 103. Could you help us solve this inference problem?

The model information is shown in the following figure:

We followed the code of the example "KL720DemoGenericInference.py" to test:

    """
    prepare the image
    """
    IMAGE_FILE_PATH = 'w1h1000.png'
    print('[Read Image]')
    img = cv2.imread(filename=IMAGE_FILE_PATH)
    img_buffer = cv2.cvtColor(src=img, code=cv2.COLOR_BGR2BGRA)
    print(img_buffer.shape)
    print(' - Success')

    LOOP_TIME = 1

    """
    prepare app generic inference config
    """
    generic_raw_image_header = kp.GenericRawImageHeader(
        model_id=model_nef_descriptor.models[0].id,
        resize_mode=kp.ResizeMode.KP_RESIZE_ENABLE,
        padding_mode=kp.PaddingMode.KP_PADDING_CORNER,
        normalize_mode=kp.NormalizeMode.KP_NORMALIZE_KNERON,
        inference_number=0
    )

    """
    starting inference work
    """
    print('[Starting Inference Work]')
    print(' - Starting inference loop {} times'.format(LOOP_TIME))
    print(' - ', end='')
    for i in range(LOOP_TIME):
        try:
            kp.inference.generic_raw_inference_send(device_group=device_group,
                                                    generic_raw_image_header=generic_raw_image_header,
                                                    image=img_buffer,
                                                    image_format=kp.ImageFormat.KP_IMAGE_FORMAT_RGBA8888)

            generic_raw_result = kp.inference.generic_raw_inference_receive(device_group=device_group,
                                                                            generic_raw_image_header=generic_raw_image_header,
                                                                            model_nef_descriptor=model_nef_descriptor)
        except kp.ApiKPException as exception:
            print(' - Error: inference failed, error = {}'.format(exception))
            exit(0)

        print('.', end='', flush=True)
    print()

Hi Evelyn,

There are 3 parts you can check:

https://www.kneron.com/forum/discussion/191/rgb-24-bit-support#latest

Thanks.

Hi Otis,

1. We are not sure exactly what you mean by "1 dimension". In the examples, images are converted to BGR565 and have shape (H, W, 2), namely 3 dimensions. So we tried (1000, 1, 4) and (1, 1000, 4), but neither worked. We even tested (1, 4000) and (4000, 1) just in case. To test truly 1-dimensional data, we also tried "generic_raw_inference_bypass_pre_proc_send", which takes bytearrays as input directly, and fed it a 4000-element array (uint8). That was certainly a 4000-byte 1-D array, but it didn't work either. The 4-channel model mentioned in our previous comment was used for all these tests, and we even tested data read from an image file, as the examples do. What else could we be missing?

2. Our problem is not "inference results are wrong" but "the receive function always times out", so permutation is something we may have to deal with only once an inference finally succeeds. However, we do have two questions about your suggestion:

a) We've checked the link, but we can't understand the format mode part. In the examples, data is sent either as images or as bytearrays. Images are sent directly once they have been converted to the target format, which means the remaining processing (if any) is taken care of by the library. Bytearrays can be sent if their length meets what the model needs. We double-checked the size of the byte data used in the example, and it is exactly H*W*Ch. Therefore, we can't see where we should put the format mode bytes. Could you please explain this more clearly?

b) According to the link, the format mode of RGBA8888 is 13. But in the document and the library, the raw value of "KP_IMAGE_FORMAT_RGBA8888" is 2 (0x2). What is the difference between these two?
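
For reference, the flat 4000-byte buffer described in point 1 can be sketched as follows. This is an illustration only, written with the standard library; `pack_hwc_uint8` is a hypothetical helper, and mapping floats in [0, 1) to 0..255 is an assumption rather than what the model necessarily expects.

```python
import random


def pack_hwc_uint8(samples):
    """Flatten samples[h][w][c] (floats in [0, 1)) into interleaved uint8 bytes."""
    out = bytearray()
    for row in samples:
        for pixel in row:
            for value in pixel:
                # Scale to 0..255 and clamp so the result always fits in one byte.
                out.append(min(255, max(0, int(value * 256))))
    return bytes(out)


# 1000 time steps, width 1, 4 channels -> 1000 * 1 * 4 = 4000 bytes.
samples = [[[random.random() for _ in range(4)]] for _ in range(1000)]
buffer = pack_hwc_uint8(samples)
print(len(buffer))
```

The length check confirms only that the buffer has H*W*Ch bytes; it says nothing about whether the byte order matches the layout the NPU expects.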

Hi Evelyn,

Please replug your dongle first, and then retry with a 4000-element array (uint8).

The firmware may be stuck, in which case no change to the settings will help.

If it still does not work, could you please provide your nef file, firmware and image so that we can help you debug?

Let's figure out the problems first.

Thanks.

Hi Otis,

Could you please provide a cloud storage link where we can upload the .nef file? We cannot upload it here since it is classified.

The firmware we are using comes from KL720_SDK_1.4.1. The path is ../KL720_SDK_1.4.1/KL720_SDK/firmware/utils/bin_gen/flash_bin, and the files are "fw_ncpu.bin" and "fw_scpu.bin".

The image is shown below; it is a 1x1000 PNG:

Hi Evelyn,

Please upload your related files here:

https://kneron-my.sharepoint.com/:f:/g/personal/otis_hsieh_kneron_us/EkF_W8YLb99PpStAcYVS3JEB32sFOsiNgSfRqHPcHR_P6A

Thanks.

Hi Otis,

Apologies for the delay; I had to wait for my boss to approve the upload. The related files have been uploaded to the link you provided. Please help us debug, thanks!

Hi Evelyn,

We've tested your example, and we think the problem may be related to the model, because inference gets stuck in the NPU.

Could you provide your original model file (.onnx) and, if it is open source, any related sources for your TCN model?

We can then check the layers inside to see what is going on.

Thanks.

Hi Otis,

The .onnx file and .bie file have been uploaded to the link you provided. Please help us debug, thanks!

Hi Evelyn,

We looked at the onnx file and found that it does not seem to match the nef file. (Did you update it?)

We would like to confirm whether the nef file (random_tcn_conv2d_beam4.nef) was converted from exactly this onnx file (random_optimized.onnx).

For now, we are using the onnx file to test what is going on.

Thanks.

Hi Evelyn,

We converted the onnx file to a nef file ourselves and inference works, so you can try the same.

Please use the latest version of the toolchain to convert the onnx file to a nef file, since we think the nef file you provided does not match the onnx file.

With the newly converted nef file, run inference with bypass pre-proc inference rather than generic inference: the layer shows a channel count of 5, which generic inference does not support.

You should also take care of the data permutation.

According to ioinfo.csv, your data format is 1W16C8B.
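
To illustrate what such a permutation might involve, here is a minimal sketch. It assumes that 1W16C8B means each width position stores its channels as 8-bit values in a zero-padded group of 16 bytes; that reading is an assumption for illustration and should be checked against the toolchain documentation. `pack_1w16c8b` is a hypothetical helper, not part of the Kneron library.

```python
def pack_1w16c8b(data, channel_block=16):
    """Pack data[h][w][c] (signed 8-bit ints), padding channels to a 16-byte block."""
    out = bytearray()
    for row in data:
        for pixel in row:
            if len(pixel) > channel_block:
                raise ValueError("this sketch handles at most one channel block")
            for value in pixel:
                if not -128 <= value <= 127:
                    raise ValueError("value does not fit in 8 bits")
                out.append(value & 0xFF)  # store as a two's-complement byte
            # Zero-fill the unused channel slots of this block (assumed padding).
            out.extend(b"\x00" * (channel_block - len(pixel)))
    return bytes(out)


# 1 row, 2 width positions, 5 channels each -> 2 * 16 = 32 bytes.
packed = pack_1w16c8b([[[1, 2, 3, 4, 5], [-1, -2, -3, -4, -5]]])
print(len(packed))
```

Under this assumed layout, a 5-channel input with 1000 positions would occupy 16000 bytes rather than 5000, which is why the buffer length alone can differ from H*W*Ch.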

For normalization and preprocessing, you can refer here:

Thanks.
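
Since bypass pre-proc inference leaves normalization and quantization to the host, the following sketch shows roughly what that step could look like. Everything here is an assumption for illustration: the normalization formula (x / 256 - 0.5), the fixed-point conversion (value * scale * 2**radix, clamped to 8 bits), and the RADIX value are not taken from this thread, and the real parameters should come from the model's quantization information. The function names are hypothetical.

```python
def normalize_kneron(byte_value):
    """Assumed Kneron-style normalization: map 0..255 to roughly [-0.5, 0.5)."""
    return byte_value / 256.0 - 0.5


def quantize_int8(value, radix, scale=1.0):
    """Convert a float to a signed 8-bit fixed-point value, with clamping."""
    fixed = round(value * scale * (2 ** radix))
    return max(-128, min(127, fixed))


RADIX = 8  # hypothetical value; read the real one from the model's input parameters
raw = [0, 64, 128, 255]
quantized = [quantize_int8(normalize_kneron(v), RADIX) for v in raw]
print(quantized)
```

With these illustrative values the whole pipeline reduces to subtracting 128 from each byte; a different radix or scale would change the result.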