Last Activation Layer for Classification Model

Hi,

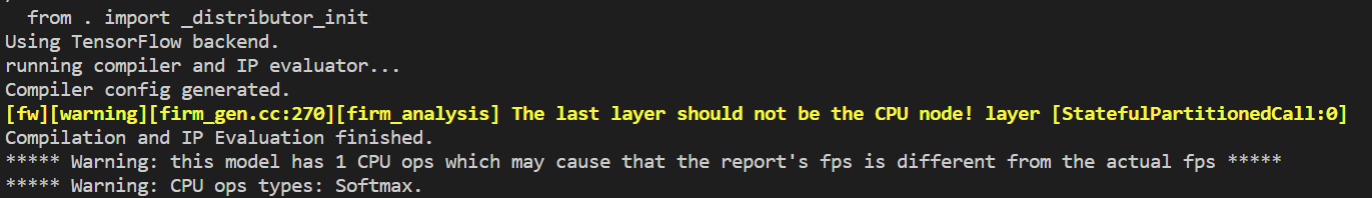

According to this table, it seems that sigmoid and softmax activations are not supported on the KL520. When I used a sigmoid layer, I got an error, so I switched to softmax, which gave me only a warning.

However, I took a sample of 24 images that the original TensorFlow Lite model classified correctly and fed them into the ONNX model, and the accuracy dropped from 100% to 75%. So what are my options for the activation function if neither sigmoid nor softmax is compatible (tanh is not in the list either)?

Also, what is the difference between CPU and NPU? How does that relate to KL520?

Thanks

Kaveen


Comments

@Kaveen

Hi Kaveen,

The reduced accuracy may be due to removing the operator, but that's okay.

If you remove the unsupported operator from the model, you can run that operator's calculation on the host side, applying it to the results returned by the device.

You can refer to: http://doc.kneron.com/docs/#520_1.5.0.0/getting_start/#6-build-new-model-binary-based-on-mobilenet-v2-image-classification (6.2. Model editing (remove softmax at the end))
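As a rough illustration of doing the removed activation on the host side, here is a minimal Python sketch. It assumes the device returns the raw pre-activation scores as a plain list of floats; the values in `raw_scores` are made up for the example and do not come from a real KL520 run, and the function names are not part of any Kneron API.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)                       # subtract the max to avoid overflow in exp
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Numerically stable logistic sigmoid for a single raw score."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)                       # for negative x, exp(x) cannot overflow
    return z / (1.0 + z)

# Hypothetical raw output of the model after the final softmax was removed.
raw_scores = [2.0, 0.5, -1.0]

probs = softmax(raw_scores)
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

Because softmax and sigmoid are both monotonically increasing, the index of the largest raw score is the same as the index of the largest probability. If only the top-1 class is needed, the activation can be skipped entirely and the argmax taken directly on the raw scores.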

The CPU is what we often call the central processing unit (https://en.wikipedia.org/wiki/Central_processing_unit)

NPU stands for neural processing unit, a hardware circuit dedicated to neural network computation. Unlike a CPU, which can be programmed arbitrarily, an NPU is a fixed hardware circuit, so the toolchain includes a support list showing which operators the NPU can run. In exchange, the NPU processes those operators much faster than a CPU does.

The KL520 is a neural network acceleration chip. It offers ultra-low-power edge computing and high-performance neural network inference, which is why the KL520 contains an NPU.