ONNX Optimization format issue: using a PaddleOCR model in the Toolchain Docker

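【Summary】: How to deploy PaddleOCR to the Kneron KL720

【Description】:

The ONNX model exported from Paddle cannot go through the Optimization step in the Toolchain Docker (onnx1.13 environment).

I converted the model to ONNX with paddle2onnx, the converter officially provided by PaddleOCR. The exported ONNX model has the following properties: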

ir_version: 7

opset_version: 14

Note: paddle2onnx = 1.3.1; the ONNX file exported from Paddle is attached.

Official Paddle-to-ONNX tutorial

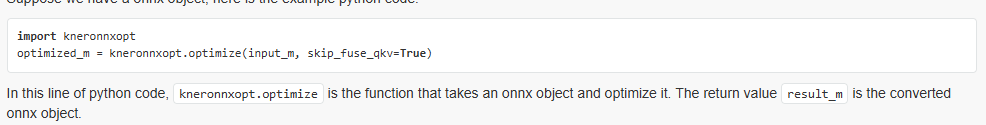

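Next, in the onnx1.13 environment of the Toolchain Docker, I tried to run the Optimization step:

https://doc.kneron.com/docs/#toolchain/manual_3_onnx/

I ran into the following problems.

【Problem 1 (solved on my own)】

The model input has a dynamic shape, which is not supported.

Fix: set a fixed shape: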

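```
import onnx

# Placeholder paths, adjust to your own files
onnx_path = "model.onnx"
fixed_path = "model_fixed.onnx"

# Load the model
model = onnx.load(onnx_path)

# Fixed shape to assign (adjust to the actual model)
fixed_shape = [1, 3, 960, 960]

# Overwrite the dimension info of every graph input
for input_tensor in model.graph.input:
    for i, dim in enumerate(input_tensor.type.tensor_type.shape.dim):
        dim.dim_value = fixed_shape[i]

# Save the modified model
onnx.save(model, fixed_path)
```

【Problem 2 (bottleneck, help needed)】: the `Squeeze` node in the ONNX model hits a dimension mismatch when the model is run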

```

Checking 0/1...

Traceback (most recent call last):

File "/workspace/onnx/convert.py", line 11, in <module>

optimized_m = kneronnxopt.optimize(input_m, skip_fuse_qkv=True)

File "/workspace/miniconda/envs/onnx1.13/lib/python3.9/site-packages/kneronnxopt/optimize.py", line 74, in optimize

model, check_ok = onnxsim.simplify(

File "/workspace/miniconda/envs/onnx1.13/lib/python3.9/site-packages/onnxsim/onnx_simplifier.py", line 209, in simplify

check_ok = model_checking.compare(

File "/workspace/miniconda/envs/onnx1.13/lib/python3.9/site-packages/onnxsim/model_checking.py", line 168, in compare

res_ori = forward(model_ori, inputs, custom_lib)

File "/workspace/miniconda/envs/onnx1.13/lib/python3.9/site-packages/onnxsim/model_checking.py", line 146, in forward

sess = rt.InferenceSession(

File "/workspace/miniconda/envs/onnx1.13/lib/python3.9/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py", line 419, in __init__

self._create_inference_session(providers, provider_options, disabled_optimizers)

File "/workspace/miniconda/envs/onnx1.13/lib/python3.9/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py", line 474, in _create_inference_session

sess = C.InferenceSession(session_options, self._model_bytes, False, self._read_config_from_model)

onnxruntime.capi.onnxruntime_pybind11_state.Fail: [ONNXRuntimeError] : 1 : FAIL : Node (Squeeze.6) Op (Squeeze) [ShapeInferenceError] Dimension of input 2 must be 1 instead of 20

```
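
The last line of the traceback comes from ONNX shape inference: Squeeze may only drop axes whose size is 1, and axis 2 of the tensor reaching `Squeeze.6` was inferred as size 20. A minimal pure-Python sketch of that rule follows. The function name `squeeze_shape` and the example shapes are illustrative assumptions, not values read from the attached model.

```python
def squeeze_shape(shape, axes):
    """Return `shape` with the given axes removed, as Squeeze would.

    Raises ValueError when a requested axis does not have size 1,
    mirroring the wording of the shape-inference error above.
    """
    rank = len(shape)
    drop = set()
    for axis in axes:
        axis = axis + rank if axis < 0 else axis  # allow negative axes
        if shape[axis] != 1:
            raise ValueError(
                f"Dimension of input {axis} must be 1 instead of {shape[axis]}"
            )
        drop.add(axis)
    return [d for i, d in enumerate(shape) if i not in drop]


# Hypothetical shapes: axis 2 has size 1, so it can be squeezed.
print(squeeze_shape([1, 512, 1, 40], [2]))

# Hypothetical shapes: axis 2 has size 20, so the same Squeeze is rejected.
try:
    squeeze_shape([1, 512, 20, 40], [2])
except ValueError as err:
    print(err)
```

If the size of that axis depends on the input height or width, a different fixed input shape might bring it down to 1. The thread does not confirm whether that is the case for this model.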

Comments

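Hello,

This model appears to contain the Squeeze operator, which our device does not support:

Please refer to this table and confirm that every operator in the model is supported by the KL720: Hardware Supported Operators - Document Center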
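
One way to run this check is to list the operator types in the ONNX graph and compare them with the table. The sketch below assumes the `onnx` Python package is available for the loading step. `SUPPORTED_OPS` is a placeholder that has to be filled in from the Hardware Supported Operators table, and the helper names are hypothetical.

```python
from collections import Counter


def collect_op_types(model_path):
    """Count operator types in the top-level graph of an ONNX file."""
    import onnx  # imported here so the comparison helper works without onnx

    model = onnx.load(model_path)
    # Nodes nested in subgraphs (If/Loop bodies) are not visited.
    return Counter(node.op_type for node in model.graph.node)


def unsupported_ops(op_counts, supported):
    """Return {op_type: count} for operators missing from `supported`."""
    return {op: n for op, n in sorted(op_counts.items()) if op not in supported}


# Placeholder only: copy the real list from the Hardware Supported Operators table.
SUPPORTED_OPS = {"Conv", "Relu"}

# Hand-written counts stand in for collect_op_types("model.onnx").
example_counts = Counter({"Conv": 12, "Relu": 10, "Squeeze": 1})
print(unsupported_ops(example_counts, SUPPORTED_OPS))
```

With a real file, `unsupported_ops(collect_op_types(path), SUPPORTED_OPS)` gives the operators to look at first.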

Dear Maria,

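I have tried replacing operators by hand several times without success, so I would like to ask two questions:

1. Can operators in an already converted ONNX file be replaced manually?

2. If so, is there a recommended way to do it?

I would appreciate your help with the above. Thank you.

Alternatively, is there an OCR model you would recommend that avoids the unsupported-operator problem?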

Hi Jimmy,

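Kneron's ONNX optimizer should be able to replace Squeeze with other operators, but this model's input is dynamic, so it is not supported.

Please use a fixed model input instead, thanks! We also recommend using a newer Toolchain version, such as v0.31.0.

Update: you can pass an argument to the optimize command to specify the input shape, for example: --overwrite-input-shapes "data:1,3,224,224"

Details: Kneronnxopt - Document Center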
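
The value in that example pairs an input name with a comma-separated shape. The sketch below only illustrates how such a string can be read and applied to a single named input, in the style of the snippet from Problem 1. `parse_shape_spec` and `set_input_shape` are hypothetical helpers, not part of kneronnxopt, and the syntax the tool actually accepts should be checked against the Kneronnxopt documentation.

```python
def parse_shape_spec(spec):
    """Split 'name:d0,d1,...' into (name, [d0, d1, ...])."""
    # rpartition tolerates ':' inside the tensor name (e.g. 'x:0:1,3,224,224').
    name, sep, dims = spec.rpartition(":")
    if not sep or not name:
        raise ValueError(f"expected 'name:d0,d1,...', got {spec!r}")
    shape = [int(d) for d in dims.split(",")]
    if any(d <= 0 for d in shape):
        raise ValueError(f"every dimension must be a positive integer: {shape}")
    return name, shape


def set_input_shape(model, name, shape):
    """Write `shape` into the graph input called `name` of a loaded ONNX model."""
    for graph_input in model.graph.input:
        if graph_input.name != name:
            continue
        dims = graph_input.type.tensor_type.shape.dim
        if len(dims) != len(shape):
            raise ValueError(f"{name} has rank {len(dims)}, got {len(shape)} dims")
        for dim, value in zip(dims, shape):
            dim.dim_value = value  # replaces a symbolic dimension with a fixed one
        return
    raise KeyError(f"no graph input named {name!r}")


name, shape = parse_shape_spec("data:1,3,224,224")
print(name, shape)
```

Unlike a loop over every graph input, this touches only the input that is named, so a mismatch in name or rank is reported instead of being silently applied. The name `data` comes from the example above; the real input name has to be read from the model.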