Ultralytics Tiny YOLOv3

Hello, the Ultralytics repo (https://github.com/ultralytics/yolov3) is a really popular and stable open-source implementation of YOLOv3. I've been trying to port it to Kneron, but I've been having issues.

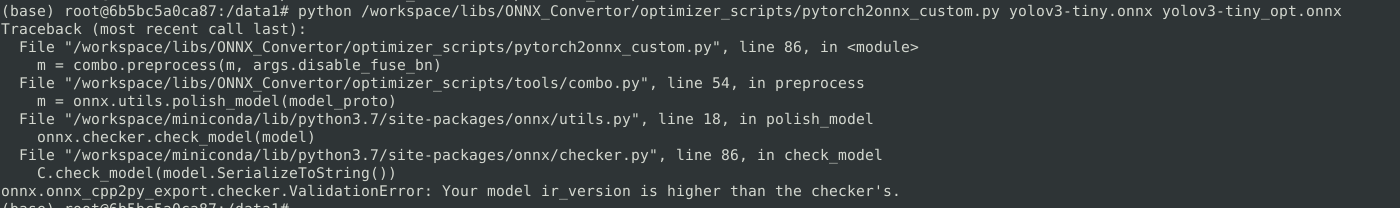

The original repo requires torch 1.8 and exports to ONNX using opset 12. I've tried to modify it so that it uses torch 1.6 and exports using opset 9. I've attached the exported ONNX model. However, I get the following error when running pytorch2onnx.py. Any idea how to solve this issue?

Thanks


Comments

Besides opset 9, the toolchain has some other version requirements. The toolchain uses PyTorch v1.2 and ONNX v1.4. If you want to use a higher PyTorch version to export the ONNX file, please make sure the ir_version is 4. We will support higher versions in the future, but for now please modify your model to fit these version requirements.
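A quick way to see which ir_version and opset an exported file actually carries is to read them off the loaded model. This is only a minimal sketch, assuming the `onnx` Python package is installed; `describe_versions` and `check_file` are illustrative helper names and not part of the toolchain.

```python
def describe_versions(model):
    """Return (ir_version, {domain: opset_version}) for a loaded ONNX model."""
    # An empty domain string denotes the default "ai.onnx" operator set.
    opsets = {(imp.domain or "ai.onnx"): imp.version for imp in model.opset_import}
    return model.ir_version, opsets


def check_file(path, want_ir=4, want_opset=9):
    """Load an ONNX file and report whether it matches the expected versions."""
    import onnx  # imported lazily so describe_versions has no hard dependency

    ir_version, opsets = describe_versions(onnx.load(path))
    ok = ir_version == want_ir and opsets.get("ai.onnx") == want_opset
    print(f"{path}: ir_version={ir_version}, opsets={opsets}, ok={ok}")
    return ok
```

Calling `check_file("model.onnx")` (the file name is a placeholder) prints the versions found and returns whether they match ir_version 4 and opset 9.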

There are also some unsupported layers in your model. Please refer to the documentation and use editor.py to modify the ONNX model before compiling: http://doc.kneron.com/docs/#toolchain/converters/#7-model-editor

Just want to share a tricky way to do the conversion.

In this case, I tried to downgrade the torch version to match the ir_version required by kneron/toolchain:520_v0.12.0 (onnx: 1.4.1, ir_version: 4), but I got a model loading issue with low torch versions such as 1.2.

So I used version 1.7 to export the model to ONNX (run ./models/export.py), making sure that "keep_initializers_as_inputs=True" and "opset_version=9" are set in torch.onnx.export.
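For reference, an export call with these two flags might look like the sketch below. Only `opset_version=9` and `keep_initializers_as_inputs=True` come from the post; the helper name `export_to_onnx`, the default output file name, and the dummy input are assumptions for illustration, and the repo's own ./models/export.py may pass additional arguments.

```python
# The two settings mentioned in the post above.
EXPORT_KWARGS = {
    "opset_version": 9,
    "keep_initializers_as_inputs": True,
}


def export_to_onnx(model, dummy_input, path="model.onnx"):
    """Export a torch.nn.Module to ONNX with the flags above (hypothetical helper)."""
    import torch  # imported lazily; requires a PyTorch install

    model.eval()  # export in inference mode
    torch.onnx.export(model, dummy_input, path, **EXPORT_KWARGS)
    return path
```

The dummy input would be a tensor of the shape the network expects, for example `torch.zeros(1, 3, 416, 416)` if the model takes 416x416 RGB images (an assumed size, adjust to your config).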

The ir_version of the exported model is 6, which is higher than the toolchain accepts and causes an ir_version error.

So I just changed the ir_version directly, which gives an ONNX model with ir_version 4.
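One way to make this change, assuming the `onnx` Python package is installed, is to load the file, overwrite the field, and save it again. The helper names `force_ir_version` and `patch_file` are made up for this sketch. Note that this only rewrites the version number; it does not convert any operators.

```python
def force_ir_version(model, target=4):
    """Overwrite model.ir_version in place and return the previous value."""
    previous = model.ir_version
    model.ir_version = target
    return previous


def patch_file(src, dst, target=4):
    """Load an ONNX file, set its ir_version, and save it under a new name."""
    import onnx  # imported lazily so force_ir_version has no hard dependency

    model = onnx.load(src)
    previous = force_ir_version(model, target)
    onnx.save(model, dst)
    print(f"ir_version {previous} -> {target}, saved to {dst}")
```

For example, `patch_file("exported.onnx", "exported_ir4.onnx")` (placeholder file names) writes a copy with ir_version 4 and leaves the original untouched.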

Finally, we can run pytorch_exported_onnx_preprocess.py or onnx2onnx.py and compile this model successfully.

Note 1: As far as I know, none of the operators in this model differ between these versions. That's why I can do this.

Note 2: Just want to share another useful tool for cutting a model: https://github.com/onnx/onnx/blob/master/docs/PythonAPIOverview.md#extracting-sub-model-with-inputs-outputs-tensor-names
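A rough sketch of how that sub-model extraction could be used is shown below. It relies on `onnx.utils.extract_model`, which exists only in onnx releases newer than the toolchain's 1.4.1, so it would have to run in a separate environment. The helpers `known_tensor_names` and `cut_model` are hypothetical names; the name check is an extra safety step added here, not something the linked page requires.

```python
def known_tensor_names(graph):
    """Collect every tensor name that appears in an ONNX graph."""
    names = {value.name for value in graph.input}
    names.update(value.name for value in graph.output)
    for node in graph.node:
        names.update(node.input)
        names.update(node.output)
    names.discard("")  # an empty string marks an omitted optional input
    return names


def cut_model(src, dst, input_names, output_names):
    """Extract the sub-graph between the given tensor names into a new file."""
    import onnx
    from onnx.utils import extract_model  # not available in onnx 1.4.1

    known = known_tensor_names(onnx.load(src).graph)
    missing = sorted((set(input_names) | set(output_names)) - known)
    if missing:
        raise ValueError(f"tensor names not found in graph: {missing}")
    extract_model(src, dst, input_names, output_names)
```

The tensor names to cut at can be read from a viewer such as Netron, then passed as lists, e.g. `cut_model("full.onnx", "cut.onnx", ["images"], ["some_tensor"])` where all four arguments are placeholders.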

Thank you, it works great!!

Hello, I would like to revisit this thread now that the toolchain upgrade has added support for opset 11. I exported the ONNX model using the "opset_version=11, keep_initializers_as_inputs=True" flags. I then optimized the resulting model using the pytorch2onnx.py script.

I have attached the resulting model. It seems that some layers do not have definite dimensions. I did not have this issue in the older toolchain. Can someone help explain what changed between the two versions?

Thanks

Hi Raef Youssef,

This issue comes from the change from opset 9 to opset 11.

When calling "torch.onnx.export" with opset_version=11, torch gives us a model with an extra branch, which does not happen with opset 9.

The extra branch should be eliminated during optimization (constant folding), but the current toolchain version can't do this.

We are working on it.

Also, just want to share another useful tool for simplifying models:

https://github.com/daquexian/onnx-simplifier

As a stopgap, you can use this tool to simplify the model first (make sure to run "onnx2onnx.py" after onnx-simplifier).
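A possible way to script this, assuming the `onnx` and `onnxsim` (onnx-simplifier) Python packages are installed, is sketched below. `simplify_file` and `undefined_dims` are illustrative names. The second helper is an extra check added here to list intermediate tensors that still have a non-fixed dimension after simplification; it only looks at `graph.value_info`, so tensors with no shape information at all are not reported. The toolchain's onnx2onnx.py still needs to be run on the result afterwards.

```python
def undefined_dims(graph):
    """Map tensor name -> shape for value_info entries with a non-fixed dim."""
    report = {}
    for value in graph.value_info:
        shape = []
        for dim in value.type.tensor_type.shape.dim:
            # protobuf reports dim_value == 0 when no fixed size is set;
            # a symbolic size, if any, is stored in dim_param instead.
            shape.append(dim.dim_value if dim.dim_value > 0 else (dim.dim_param or "?"))
        if any(not isinstance(d, int) for d in shape):
            report[value.name] = shape
    return report


def simplify_file(src, dst):
    """Run onnx-simplifier on a file and return any remaining non-fixed shapes."""
    import onnx
    from onnxsim import simplify

    simplified, ok = simplify(onnx.load(src))
    if not ok:
        raise RuntimeError("simplified model failed onnx-simplifier's check")
    onnx.save(simplified, dst)
    return undefined_dims(simplified.graph)
```

If `simplify_file` returns an empty dict, every tensor listed in `value_info` has fixed dimensions.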

Thanks Eric, please let me know when the toolchain is fixed. I'll also try the onnx-simplifier tool you suggested.