Run pre-processing in model head

Hi,

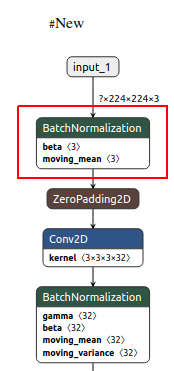

We are trying to run the pre-processing inside the model head. We added a Batch-normalization OP at the head of our original model. Here are the models:

We saved these models in .h5 format using Keras 2.2. We used a different pre-processing method for each model and compared the inference results. The pre-processing for each model is:

# Original
import numpy as np

def preprocess(x):
    x = x - 128  # range -128 ~ +127
    x = x.astype(np.int8)  # convert to signed int8
    x = x.astype(np.float32)/64.0  # range -2 ~ +1.98
    return x

# New
def preprocess(x):
    x = np.clip(x - 128, -128, 127)
    return x

Their results in Python are similar.
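For readers who want to check why the two variants can agree in Python, here is a minimal sketch. It assumes the input pixels are uint8 (so `x - 128` wraps around before the int8 cast) and that the Batch-normalization head of the New model acts as a plain division by 64; neither is confirmed above, and `head_scale` is a hypothetical stand-in rather than the actual layer.

```python
def original_preprocess(v):
    """Emulate the Original pre-processing for one uint8 pixel value v (0..255)."""
    wrapped = (v - 128) % 256  # uint8 subtraction wraps around
    signed = wrapped - 256 if wrapped > 127 else wrapped  # reinterpret as int8
    return signed / 64.0


def new_preprocess(v):
    """New pre-processing: shift and clip only, the value stays in -128..127."""
    return max(-128, min(127, v - 128))


def head_scale(u, scale=1.0 / 64.0):
    """Assumed stand-in for the Batch-normalization head: a plain rescale."""
    return u * scale


# Compare both paths over every possible 8-bit pixel value.
mismatches = [v for v in range(256)
              if original_preprocess(v) != head_scale(new_preprocess(v))]
print("mismatching pixel values:", len(mismatches))
```

Under these assumptions the two paths give the same float for every pixel value, so any difference on the device would have to come from somewhere after this step.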

We then ran the New model on Kneron.

(1) Convert the New model to a Kneron bin file. The batch_compile_input_params.json is attached; the important keys are:

"img_channel": ["RGB"]

"img_preprocess_method": ["none"]

(2) The code snippet in C:

...

dme_cfg.image_format = IMAGE_FORMAT_BYPASS_PRE | IMAGE_FORMAT_RAW_OUTPUT;

...

for (int h = 0; h < face.rows; h++) {
    for (int w = 0; w < face.cols; w++) {
        uint8_t *intensity = face.ptr(h, w);
        int R = (int)intensity[0] - 128;
        ...
        intensity[0] = (int8_t) R;
        ...
    }
}

...

ret = kdp_dme_inference(HEAD_POSE_IDX, (char *)face.data, ...);

...

We compared the results from Python (running the New model) with the results from Kneron (running the bin file converted from the New model) and found large differences.

We suspect something goes wrong when the New model is converted. Any ideas? Thanks in advance.
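To put a number on the difference between the Python and Kneron outputs, a small comparison helper along these lines may be useful. This is only a sketch: `compare_outputs` is a hypothetical name, and the two lists below are made-up placeholders, not results from our models.

```python
import math


def compare_outputs(reference, candidate):
    """Return (max absolute difference, cosine similarity) of two output vectors."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same length")
    max_abs = max(abs(r - c) for r, c in zip(reference, candidate))
    dot = sum(r * c for r, c in zip(reference, candidate))
    norm_r = math.sqrt(sum(r * r for r in reference))
    norm_c = math.sqrt(sum(c * c for c in candidate))
    if norm_r == 0.0 or norm_c == 0.0:
        return max_abs, float("nan")  # cosine is undefined for a zero vector
    return max_abs, dot / (norm_r * norm_c)


# Placeholder values for illustration only.
python_out = [0.10, -0.25, 0.40]
device_out = [0.12, -0.20, 0.35]
max_abs, cosine = compare_outputs(python_out, device_out)
print("max abs diff:", max_abs, "cosine similarity:", cosine)
```

A cosine similarity close to 1 with a small maximum difference would point to ordinary quantization noise, while a low similarity would point to a setup problem such as a wrong input format.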

Comments

How did you set up the radix? The original model's input range is -2 to 1.98 (radix 6), but the new model's input range is -128 to 127 (radix 0).

If the radix is not set correctly, it will cause quantization errors.
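As an illustration of the radix point, here is a minimal sketch. It assumes the common fixed-point convention that a signed 8-bit value q stands for q / 2**radix, which matches the two examples above (radix 6 for -2 to 1.98, radix 0 for -128 to 127); `radix_for_range` and `quantize` are hypothetical helpers, not toolchain functions.

```python
def radix_for_range(lo, hi, bits=8, max_radix=15):
    """Largest radix such that [lo, hi] * 2**radix still fits in a signed integer."""
    qmin = -(1 << (bits - 1))
    qmax = (1 << (bits - 1)) - 1
    for radix in range(max_radix, -max_radix - 1, -1):
        scale = 2.0 ** radix
        if lo * scale >= qmin and hi * scale <= qmax:
            return radix
    raise ValueError("range does not fit in the searched radix interval")


def quantize(value, radix, bits=8):
    """Scale by 2**radix, round, and saturate to the signed integer range."""
    qmin = -(1 << (bits - 1))
    qmax = (1 << (bits - 1)) - 1
    return max(qmin, min(qmax, round(value * 2.0 ** radix)))


print(radix_for_range(-2.0, 1.98))  # Original input range
print(radix_for_range(-128, 127))   # New input range

# Feeding New-model inputs (-128..127) through a radix-6 quantizer saturates
# almost every value, which is the kind of error described above.
print([quantize(v, 6) for v in (-128, -1, 0, 3, 127)])
```

Under this convention, any input with magnitude above about 2 saturates when radix 6 is used, so most of the image information would be lost.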

Hi kidd,

Thanks for your reply.

# Original

@batch_compile_input_params.json

"img_preprocess_method": ["customized"],

@script/img_preprocess.py

def normalization(x, mode, **kwargs):
    ...
    if mode == 'customized': #-123 - 123 8-0
        #print("customized")
        #y = (x - 127.5) * 0.0078125
        #return y
        return x/128

By the way, our expected pre-processing is like this:

((x/255) - 0.5)/0.2
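For reference, this expression is a single scale and offset, so it could in principle be folded into one affine layer in the model head. The sketch below assumes the head receives u = x - 128 (the shifted int8 input used in the New model); `SCALE`, `OFFSET`, and `affine_head` are illustrative names only.

```python
def expected(x):
    """The expected pre-processing for a raw pixel value x in 0..255."""
    return ((x / 255) - 0.5) / 0.2


# Substituting x = u + 128 gives: expected = SCALE * u + OFFSET
SCALE = 1.0 / (255 * 0.2)          # 1/51
OFFSET = (128 / 255 - 0.5) / 0.2   # small positive offset, roughly 0.0098


def affine_head(u):
    """Assumed head layer applied to the shifted input u = x - 128."""
    return SCALE * u + OFFSET


# Check that both forms agree over the full 8-bit input range.
max_err = max(abs(expected(x) - affine_head(x - 128)) for x in range(256))
print("max error:", max_err)
print("output range:", expected(0), "to", expected(255))
```

Note that the result spans roughly -2.5 to 2.5, which is wider than the -2 to 1.98 range of the Original pre-processing.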

Hi,

We found the root cause of this problem. The Original and New models differ after conversion: some OPs, for example "Batch Normalization" and "ReLU", are removed during model conversion. We ran the optimized ONNX model (converted with the Kneron toolchain) in Python; some OPs had been removed, and the answer was still correct. But when we ran the Kneron binary model built from that optimized ONNX model, the answer was incorrect. The "Batch Normalization" OPs were removed in both models, which is why we suspect that the converted model (the Kneron binary) is more sensitive than the original model when a "Batch Normalization" OP is used. Anyway, thanks for your help. We will close this issue.