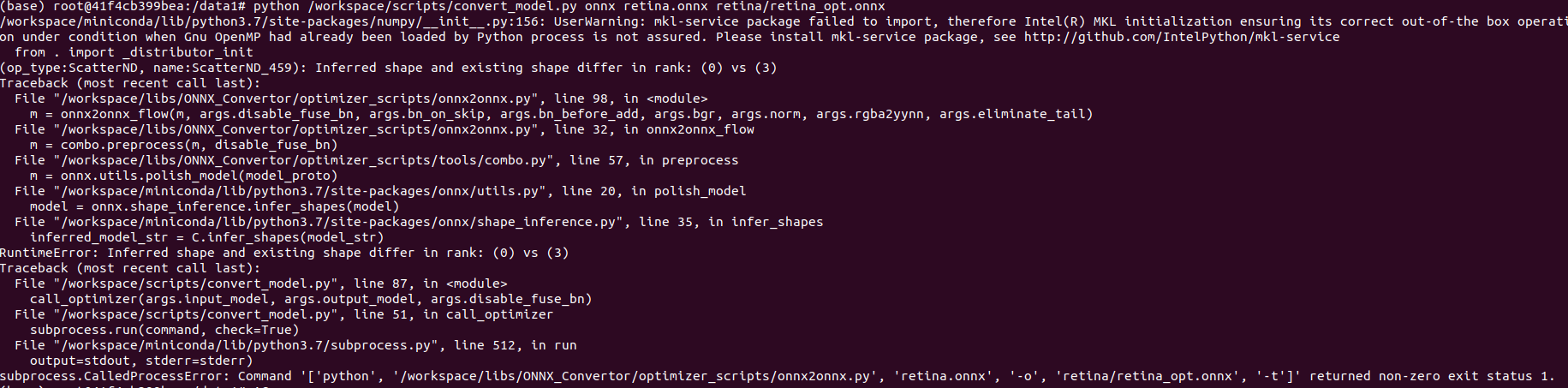

Inferred shape and existing shape differ in rank: (0) vs (3) (pytorch_exported_onnx2optimized_onnx)

I'm trying to convert a multi-task face detection network from pytorch_exported_onnx to optimized_onnx.

The model returns 3 outputs: classes, bounding boxes, and landmarks.

If I open the model in Netron, the output data IDs are 913, 1231, and 1429.

I think the problem is the output format.

Is there any restriction on the output format, such as the number of dimensions?

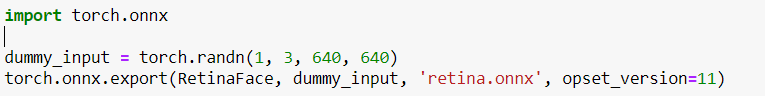

I exported the RetinaFace model as shown below.

Here is my model: https://drive.google.com/file/d/1eNmBzWCKuSedB8us0eXWHhNWqirNz-7i/view?usp=sharing

torch version: 1.7.1

torchvision version: 0.8.2

Comments

Hi ChiYu,

It seems that your exported ONNX is invalid.

You can check your ONNX with the official ONNX API as follows:

    import onnx
    import onnx.shape_inference

    m = onnx.load('/data1/retina.onnx')
    onnx.checker.check_model(m)
    onnx.shape_inference.infer_shapes(m)

If it crashes, something is wrong in your ONNX. You have to make sure the ONNX is valid before converting it.

Sometimes the issue comes from a bug in ONNX, and sometimes it comes from PyTorch.

I recommend removing the hardware-unfriendly operators directly in your torch code when you export the ONNX.

Like here:

Try this; it will make your life easier.