Error when running FpAnalyser with tiny yolov4

Hello, I was trying to optimize my own ONNX model with onnx2onnx.py, but it failed, so I optimized the model on another platform and then ran onnx2onnx.py on the result.

This is the onnx model I used:

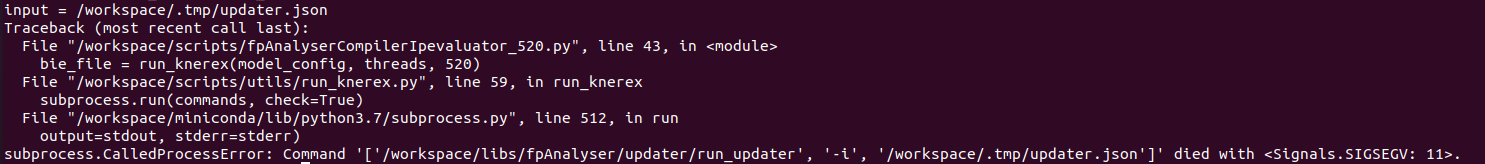

After that, I ran the fpAnalyserCompilerIpevaluator_520.py script, which gave this error:

I would like to know how to solve this error,

Thanks!

Comments

Which version of the toolchain are you using? And which device is this for, KL520 or KL720?

I think there are two issues with the ONNX.

Hi!

I'm using the latest version of the toolchain, and this is for a KL520 device.

I couldn't optimize the ONNX I sent you, so I used another platform to optimize it.

Then running onnx2onnx.py on it gave me this ONNX.

So I'm using this ONNX to continue with the steps, but it gives me the error above.

Thanks for the reply

It looks like the optimization doesn't convert the split correctly. It creates an extra Slice branch. What was your intention with the split? To get the first half of the channels?

And BTW, KL520 doesn't support Slice either.
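For anyone who wants to check a model before running the compiler, here is a minimal sketch that counts the operators named in this thread as unsupported on KL520. The set `UNSUPPORTED_ON_KL520` and both function names are made up for illustration; the set holds only Split and Slice because those are the two mentioned here, so it is not an official or complete list. The file-loading helper assumes the `onnx` Python package is installed.

```python
from collections import Counter

# Operators reported in this thread as unsupported on KL520.
# Assumption: this is NOT an official or complete list.
UNSUPPORTED_ON_KL520 = {"Split", "Slice"}


def find_unsupported(op_types, unsupported=UNSUPPORTED_ON_KL520):
    """Count how often each unsupported operator type appears."""
    return dict(Counter(op for op in op_types if op in unsupported))


def op_types_from_file(path):
    """Read the operator type of every top-level node in an ONNX file."""
    import onnx  # third-party package, imported only when a file is read

    model = onnx.load(path)
    return [node.op_type for node in model.graph.node]


if __name__ == "__main__":
    import sys

    # Usage: python check_ops.py model.onnx
    print(find_unsupported(op_types_from_file(sys.argv[1])))
```

An empty result only means that none of the listed operators were found at the top level of the graph; it does not guarantee that the model compiles.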

I understand. Can you help with the error I get when I optimize the first ONNX I sent you?

Thank you for the reply again.

Hi francisco soto,

I saw that there is an "auto_pad" attribute in every convolution layer of the model you provided.

I have run into a bug in an ONNX built-in function caused by the "auto_pad" attribute. You can check my reply at the end of this post:

https://www.kneron.com/forum/discussion/49/porting-tiny-yolov3-example#latest

Maybe that's why you got the error message "Inferred shape and existing shape differ".

I think it would be better to modify your source code and set explicit padding values for your convolution layers.
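To work out which explicit values to set, the padding that "auto_pad" implies can be computed by hand. The sketch below follows the SAME_UPPER / SAME_LOWER rule from the ONNX Conv operator definition for one spatial axis. The function name `explicit_pads` and the 416-pixel input size in the usage note are assumptions for illustration, not values taken from the model in this thread.

```python
import math


def explicit_pads(in_size, kernel, stride=1, dilation=1, auto_pad="SAME_UPPER"):
    """Return (pad_begin, pad_end) for one spatial axis of a convolution."""
    if auto_pad == "VALID":
        return (0, 0)
    if auto_pad not in ("SAME_UPPER", "SAME_LOWER"):
        raise ValueError(f"unsupported auto_pad value: {auto_pad}")

    # SAME_* keeps the output size at ceil(in_size / stride).
    out_size = math.ceil(in_size / stride)
    effective_kernel = (kernel - 1) * dilation + 1
    total = max((out_size - 1) * stride + effective_kernel - in_size, 0)

    # When the total is odd, SAME_UPPER puts the extra pixel at the end
    # and SAME_LOWER puts it at the beginning.
    small, large = total // 2, total - total // 2
    return (small, large) if auto_pad == "SAME_UPPER" else (large, small)
```

For example, `explicit_pads(416, 3, stride=2)` gives `(0, 1)`. In a 2D ONNX Conv node the "pads" attribute lists all begin values first and then all end values, so applying that result to both axes would be written as `[0, 0, 1, 1]`.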

Thank you for the answer,

I changed the ONNX model, and now I'm facing a new error:

Can you help me with this error?

Hi,

The latest version of the toolchain has just updated the opset from 9 to 11. If you want to use the latest version, please run the Python script "/workspace/libs/ONNX_Convertor/optimizer_scripts/onnx1_4to1_6.py" to upgrade the opset version.

By the way, KL520 doesn't support the Split and Slice operators. If you want to run inference on a model with these operators, please use KL720 instead of KL520.
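To see whether a model still needs the opset upgrade described above, the opset version recorded in the file can be read directly. This is a minimal sketch; the function names are hypothetical, and the file-loading helper assumes the `onnx` Python package is installed. It only reports the version and does not replace the toolchain's upgrade script.

```python
def default_opset(opset_pairs):
    """Return the opset version of the default ONNX domain, or None."""
    for domain, version in opset_pairs:
        # The default domain is stored as "" or spelled out as "ai.onnx".
        if domain in ("", "ai.onnx"):
            return version
    return None


def opset_pairs_from_file(path):
    """Read (domain, version) pairs from an ONNX file."""
    import onnx  # third-party package, imported only when a file is read

    model = onnx.load(path)
    return [(entry.domain, entry.version) for entry in model.opset_import]


if __name__ == "__main__":
    import sys

    # Usage: python check_opset.py model.onnx
    print("default-domain opset:", default_opset(opset_pairs_from_file(sys.argv[1])))
```

A result of 9 would suggest the model predates the opset change mentioned above.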

Thank you for solving the error.

Can the KL520 work on an aarch64 architecture?

No, KL520 is controlled through the Cortex-M4, which is a 32-bit architecture.

Ok, I understand.

I have a requirement that the KL520 be connected to a NanoPi Neo 4. Is that possible?

Thanks.

KL520 can be used with RK3399, but the USB commands of KL520 are transferred through libusb: https://github.com/libusb/libusb/releases/tag/v1.0.23

Users should make sure that libusb works in their own environment.
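One quick way to check this on the host board is to see whether the libusb-1.0 shared library can be found and initialized. The sketch below uses only the Python standard library plus the libusb C functions `libusb_init` and `libusb_exit`; the Python function names are hypothetical. It says nothing about whether the KL520 itself is detected, and it is not part of the Kneron host software.

```python
import ctypes
import ctypes.util


def find_libusb():
    """Return the name or path of the libusb-1.0 shared library, or None."""
    return ctypes.util.find_library("usb-1.0")


def libusb_initializes():
    """Try to create and destroy a default libusb context."""
    name = find_libusb()
    if name is None:
        return False

    lib = ctypes.CDLL(name)
    lib.libusb_init.argtypes = [ctypes.c_void_p]
    lib.libusb_init.restype = ctypes.c_int
    lib.libusb_exit.argtypes = [ctypes.c_void_p]
    lib.libusb_exit.restype = None

    # Passing NULL asks libusb to use its default context.
    if lib.libusb_init(None) != 0:
        return False
    lib.libusb_exit(None)
    return True


if __name__ == "__main__":
    print("libusb library:", find_libusb())
    print("libusb initializes:", libusb_initializes())
```

If the library is not found, installing the distribution's libusb-1.0 package or building it from the release linked above would be the next step.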